Deep Residual Autoencoder with Multiscaling for Semantic Segmentation of Land-Use Images

Abstract

1. Introduction

2. Related Work

2.1. Residual Learning

2.2. Multiscaling and ASPP

2.3. Semantic Segmentation via Deep Learning

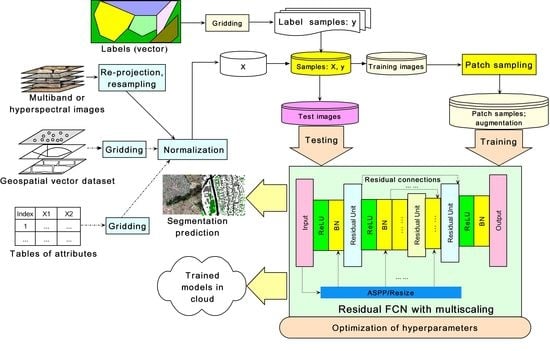

3. Deep Residual Autoencoder with Multiscaling

3.1. Autoencoder-Based Architecture

3.2. Two Types of Residual Connections

3.3. Incorporation of Atrous Convolutions and Multiscaling

3.4. Sampling of the Training Set and Boundary Effects

3.5. Metrics and Loss Functions

3.6. Implementation

4. Experimental Datasets and Evaluation

4.1. Two Datasets

4.2. Training and Evaluation

5. Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Dataset | Class | Input Size | Output Size | Oversampling Distance | Undersampling Distance | Number of Samples |
|---|---|---|---|---|---|---|
| DSTL | Crops | 256 | 224 | 220 | 350 | 2560 |
| DSTL | Buildings | 256 | 224 | 150 | 400 | 2138 |
| DSTL | Trees | 256 | 224 | 150 | 400 | 2484 |
| DSTL | Roads | 256 | 224 | 120 | 400 | 2039 |
| DSTL | Vehicles | 256 | 224 | 100 | 450 | 2219 |
| Zurich | All classes | 256 | 224 | 150 | - | 2840 |

| Dataset | Class | Model | #Par (million) a | Training PA b | Training JI/MIoU c | Validation PA | Validation JI/MIoU | Testing PA | Testing JI/MIoU |
|---|---|---|---|---|---|---|---|---|---|
| DSTL | Crops | Baseline d | 31 | 0.88 | 0.85 | 0.89 | 0.79 | 0.89 | 0.87 |
| DSTL | Crops | Residual e | 28 | 0.90 | 0.87 | 0.90 | 0.80 | 0.91 | 0.88 |
| DSTL | Crops | Res+resizing f | 31 | 0.91 | 0.88 | 0.90 | 0.81 | 0.92 | 0.89 |
| DSTL | Crops | Res+ASPP g | 29 | 0.94 | 0.93 | 0.91 | 0.83 | 0.93 | 0.91 |
| DSTL | Buildings | Baseline | 31 | 0.95 | 0.72 | 0.95 | 0.77 | 0.95 | 0.72 |
| DSTL | Buildings | Residual | 28 | 0.95 | 0.72 | 0.95 | 0.78 | 0.95 | 0.72 |
| DSTL | Buildings | Res+resizing | 31 | 0.95 | 0.72 | 0.95 | 0.77 | 0.95 | 0.72 |
| DSTL | Buildings | Res+ASPP | 29 | 0.96 | 0.76 | 0.96 | 0.80 | 0.96 | 0.75 |
| DSTL | Trees | Baseline | 31 | 0.93 | 0.56 | 0.93 | 0.61 | 0.93 | 0.53 |
| DSTL | Trees | Residual | 28 | 0.94 | 0.59 | 0.94 | 0.62 | 0.94 | 0.55 |
| DSTL | Trees | Res+resizing | 31 | 0.94 | 0.61 | 0.94 | 0.65 | 0.94 | 0.58 |
| DSTL | Trees | Res+ASPP | 29 | 0.94 | 0.61 | 0.94 | 0.64 | 0.94 | 0.57 |
| DSTL | Roads | Baseline | 31 | 0.97 | 0.71 | 0.97 | 0.74 | 0.97 | 0.67 |
| DSTL | Roads | Residual | 28 | 0.98 | 0.81 | 0.97 | 0.81 | 0.97 | 0.74 |
| DSTL | Roads | Res+resizing | 31 | 0.97 | 0.73 | 0.97 | 0.74 | 0.97 | 0.68 |
| DSTL | Roads | Res+ASPP | 29 | 0.97 | 0.76 | 0.97 | 0.77 | 0.97 | 0.69 |
| DSTL | Vehicles | Baseline | 31 | 0.99 | 0.69 | 0.99 | 0.88 | 0.99 | 0.69 |
| DSTL | Vehicles | Residual | 28 | 0.99 | 0.78 | 0.99 | 0.92 | 0.99 | 0.78 |
| DSTL | Vehicles | Res+resizing | 31 | 0.99 | 0.69 | 0.99 | 0.88 | 0.99 | 0.72 |
| DSTL | Vehicles | Res+ASPP | 29 | 0.99 | 0.72 | 0.99 | 0.90 | 0.99 | 0.69 |
| Zurich | All classes | Baseline | 31 | 0.88 | 0.78 | 0.86 | 0.78 | 0.87 | 0.69 |
| Zurich | All classes | Residual | 28 | 0.94 | 0.89 | 0.91 | 0.86 | 0.92 | 0.74 |
| Zurich | All classes | Res+resizing | 31 | 0.88 | 0.80 | 0.87 | 0.81 | 0.87 | 0.71 |
| Zurich | All classes | Res+ASPP | 29 | 0.89 | 0.80 | 0.88 | 0.80 | 0.88 | 0.72 |
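
The PA and JI/MIoU columns above are reported without their footnote definitions. Assuming they follow the usual conventions (PA as pixel accuracy, JI as the Jaccard index of a single class, MIoU as the mean intersection over union across classes), the following is a minimal sketch of how such scores could be computed from a predicted mask and a reference mask. The function names `pixel_accuracy`, `jaccard_index` and `mean_iou` are hypothetical and are not taken from the paper's implementation.

```python
from itertools import chain


def _flatten(mask):
    """Flatten a 2-D mask given as a list of rows into a flat list of labels."""
    return list(chain.from_iterable(mask))


def pixel_accuracy(pred, target):
    """Fraction of pixels whose predicted label equals the reference label."""
    p, t = _flatten(pred), _flatten(target)
    if not p or len(p) != len(t):
        raise ValueError("masks must be non-empty and of equal size")
    return sum(a == b for a, b in zip(p, t)) / len(p)


def jaccard_index(pred, target, label=1):
    """Intersection over union for one class; NaN if the class is absent from both masks."""
    p, t = _flatten(pred), _flatten(target)
    if len(p) != len(t):
        raise ValueError("masks must be of equal size")
    inter = sum(a == label and b == label for a, b in zip(p, t))
    union = sum(a == label or b == label for a, b in zip(p, t))
    return inter / union if union else float("nan")


def mean_iou(pred, target, labels):
    """Average the per-class Jaccard index over the classes that occur in either mask."""
    scores = [jaccard_index(pred, target, c) for c in labels]
    valid = [s for s in scores if s == s]  # drop NaN entries (NaN != NaN)
    return sum(valid) / len(valid) if valid else float("nan")


# Toy 2x3 masks, invented for illustration only (not data from the paper).
pred = [[1, 1, 0], [0, 1, 0]]
target = [[1, 0, 0], [0, 1, 1]]
pa = pixel_accuracy(pred, target)         # 4 of 6 pixels agree
ji = jaccard_index(pred, target, 1)       # intersection 2, union 4
miou = mean_iou(pred, target, [0, 1])     # both classes score 0.5
```

Under these assumed definitions, a class that covers few pixels can have a PA near 1 while its JI stays much lower, because PA also counts the correctly labelled background. That pattern is consistent with the Vehicles rows in the table above.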

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, L. Deep Residual Autoencoder with Multiscaling for Semantic Segmentation of Land-Use Images. Remote Sens. 2019, 11, 2142. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11182142