Mapping Landslides on EO Data: Performance of Deep Learning Models vs. Traditional Machine Learning Models

Abstract

1. Introduction

2. Mapping Landslides on EO with Machine Learning

2.1. Pixel-Based Methods

2.2. Object-Based Methods

2.3. Deep-Learning Methods

3. Methodology

3.1. Pixel-Based

3.2. Object-Based

3.3. Deep-Learning

4. Study Area

Dataset

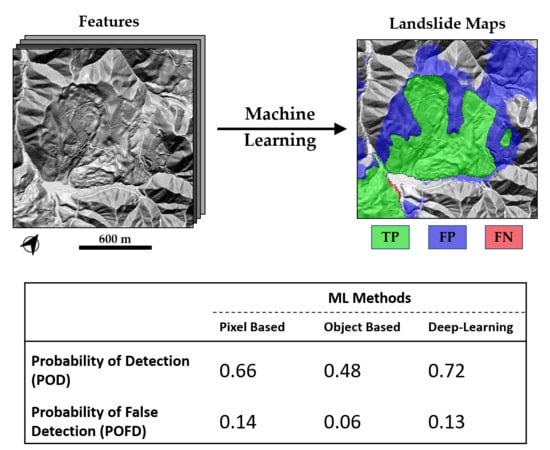

5. Results

5.1. Model Assessment Parameters

5.2. Performance Evaluation in the Testing Area

6. Discussions

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Cruden, D.M. A simple definition of a landslide. Bull. Eng. Geol. Environ. 1991, 43, 27–29.

- Turner, A.K. Social and environmental impacts of landslides. Innov. Infrastruct. Solut. 2018, 3, 70.

- Dilley, M.; Chen, R.S.; Deichmann, U.; Lerner-Lam, A.; Arnold, M.; Agwe, J.; Buys, P.; Kjekstad, O.; Lyon, B.; Yetman, G. Natural Disaster Hotspots: A Global Risk Analysis (English); World Bank: Washington, DC, USA, 2005; pp. 1–132.

- Froude, M.J.; Petley, D.N. Global fatal landslide occurrence from 2004 to 2016. Nat. Hazards Earth Syst. Sci. 2018, 18, 2161–2181.

- Cooper, A.H. The classification, recording, databasing and use of information about building damage caused by subsidence and landslides. Q. J. Eng. Geol. Hydrogeol. 2008, 41, 409–424.

- Tomás, R.; Abellán, A.; Cano, M.; Riquelme, A.; Tenza-Abril, A.J.; Baeza-Brotons, F.; Saval, J.M.; Jaboyedoff, M. A multidisciplinary approach for the investigation of a rock spreading on an urban slope. Landslides 2018, 15, 199–217.

- Casagli, N.; Cigna, F.; Bianchini, S.; Hölbling, D.; Füreder, P.; Righini, G.; Del Conte, S.; Friedl, B.; Schneiderbauer, S.; Iasio, C.; et al. Landslide mapping and monitoring by using radar and optical remote sensing: Examples from the EC-FP7 project SAFER. Remote Sens. Appl. Soc. Environ. 2016, 4, 92–108.

- Stead, D.; Wolter, A. A critical review of rock slope failure mechanisms: The importance of structural geology. J. Struct. Geol. 2015, 74, 1–23.

- Pike, R.J. The geometric signature: Quantifying landslide-terrain types from digital elevation models. Math. Geol. 1988, 20, 491–511.

- Soeters, R.; van Westen, C.J. Slope instability recognition, analysis and zonation. In Landslides Investigation and Mitigation (Transportation Research Board, National Research Council, Special Report 247); Turner, A.K., Schuster, R.L., Eds.; National Academy Press: Washington, DC, USA, 1996; pp. 129–177.

- Van Westen, C.J.; Castellanos, E.; Kuriakose, S.L. Spatial data for landslide susceptibility, hazard, and vulnerability assessment: An overview. Eng. Geol. 2008, 102, 112–131.

- Guzzetti, F.; Carrara, A.; Cardinali, M.; Reichenbach, P. Landslide hazard evaluation: A review of current techniques and their application in a multi-scale study, Central Italy. Geomorphology 1999, 31, 181–216.

- Guzzetti, F.; Mondini, A.C.; Cardinali, M.; Fiorucci, F.; Santangelo, M.; Chang, K.T. Landslide inventory maps: New tools for an old problem. Earth-Sci. Rev. 2012, 112, 42–66.

- Brunsden, D. Landslide types, mechanisms, recognition, identification. In Landslides in the South Wales Coalfield: Proceedings, Symposium, Polytechnic of Wales—1st to 3rd April, 1985; Morgan, C.S., Ed.; Polytechnic of Wales: Cardiff, UK, 1985; pp. 19–28.

- Crosta, G.B.; Frattini, P.; Agliardi, F. Deep seated gravitational slope deformations in the European Alps. Tectonophysics 2013, 605, 13–33.

- Roback, K.; Clark, M.K.; West, A.J.; Zekkos, D.; Li, G.; Gallen, S.F.; Chamlagain, D.; Godt, J.W. The size, distribution, and mobility of landslides caused by the 2015 Mw7.8 Gorkha earthquake, Nepal. Geomorphology 2018, 301, 121–138.

- Sauchyn, D.J.; Trench, N.R. Landsat applied to landslide mapping. Photogramm. Eng. Remote Sens. 1978, 44, 735–741.

- Leshchinsky, B.A.; Olsen, M.J.; Tanyu, B.F. Contour Connection Method for automated identification and classification of landslide deposits. Comput. Geosci. 2015, 74, 27–38.

- Derron, M.H.; Jaboyedoff, M. LIDAR and DEM techniques for landslides monitoring and characterization. Nat. Hazards Earth Syst. Sci. 2010, 10, 1877–1879.

- Jaboyedoff, M.; Oppikofer, T.; Abellán, A.; Derron, M.H.; Loye, A.; Metzger, R.; Pedrazzini, A. Use of LIDAR in landslide investigations: A review. Nat. Hazards 2012, 61, 5–28.

- Stumpf, A.; Kerle, N. Object-oriented mapping of landslides using Random Forests. Remote Sens. Environ. 2011, 115, 2564–2577.

- Molch, K.; Unterstein, R. DLR—Earth Observation Center—60 Petabytes for the German Satellite Data Archive D-SDA. 2018. Available online: https://www.dlr.de/eoc/en/desktopdefault.aspx/tabid-12632/22039_read-51751 (accessed on 17 December 2018).

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Huang, F.; Yin, K.; He, T.; Zhou, C.; Zhang, J. Influencing factor analysis and displacement prediction in reservoir landslides—A case study of Three Gorges Reservoir (China). Tehnički Vjesnik 2016, 23, 617–626.

- Varnes, D. Landslide hazard zonation: A review of principles and practice. Nat. Hazards 1984, 3, 61.

- Micheletti, N.; Foresti, L.; Robert, S.; Leuenberger, M.; Pedrazzini, A.; Jaboyedoff, M.; Kanevski, M. Machine Learning Feature Selection Methods for Landslide Susceptibility Mapping. Math. Geosci. 2014, 46, 33–57.

- Reichenbach, P.; Rossi, M.; Malamud, B.D.; Mihir, M.; Guzzetti, F. A review of statistically-based landslide susceptibility models. Earth-Sci. Rev. 2018, 180, 60–91.

- Agliardi, F.; Crosta, G.; Zanchi, A. Structural constraints on deep-seated slope deformation kinematics. Eng. Geol. 2001, 59, 83–102.

- Ghorbanzadeh, O.; Blaschke, T.; Gholamnia, K.; Meena, S.R.; Tiede, D.; Aryal, J. Evaluation of different machine learning methods and deep-learning convolutional neural networks for landslide detection. Remote Sens. 2019, 11, 196.

- Moosavi, V.; Talebi, A.; Shirmohammadi, B. Producing a landslide inventory map using pixel-based and object-oriented approaches optimized by Taguchi method. Geomorphology 2014, 204, 646–656.

- Mondini, A.C.; Guzzetti, F.; Reichenbach, P.; Rossi, M.; Cardinali, M.; Ardizzone, F. Semi-automatic recognition and mapping of rainfall induced shallow landslides using optical satellite images. Remote Sens. Environ. 2011, 115, 1743–1757.

- Tanyas, H.; Rossi, M.; Alvioli, M.; van Westen, C.J.; Marchesini, I. A global slope unit-based method for the near real-time prediction of earthquake-induced landslides. Geomorphology 2019, 327, 126–146.

- Alvioli, M.; Marchesini, I.; Reichenbach, P.; Rossi, M.; Ardizzone, F.; Fiorucci, F.; Guzzetti, F. Automatic delineation of geomorphological slope units with r.slopeunits v1.0 and their optimization for landslide susceptibility modeling. Geosci. Model Dev. 2016, 9, 3975–3991.

- Huang, Y.; Zhao, L. Review on landslide susceptibility mapping using support vector machines. Catena 2018, 165, 520–529.

- Goetz, J.N.; Cabrera, R.; Brenning, A.; Heiss, G.; Leopold, P. Modelling Landslide Susceptibility for a Large Geographical Area Using Weights of Evidence in Lower Austria. In Engineering Geology for Society and Territory; Springer International Publishing: Cham, Switzerland, 2015; Volume 2, pp. 927–930.

- Martha, T.R.; Kerle, N.; Van Westen, C.J.; Jetten, V.; Kumar, K.V. Segment optimization and data-driven thresholding for knowledge-based landslide detection by object-based image analysis. IEEE Trans. Geosci. Remote Sens. 2011, 49, 4928–4943.

- Calvello, M.; Peduto, D.; Arena, L. Combined use of statistical and DInSAR data analyses to define the state of activity of slow-moving landslides. Landslides 2017, 14, 473–489.

- Keyport, R.N.; Oommen, T.; Martha, T.R.; Sajinkumar, K.S.; Gierke, J.S. A comparative analysis of pixel- and object-based detection of landslides from very high-resolution images. Int. J. Appl. Earth Obs. Geoinf. 2018, 64, 1–11.

- Chen, Z.; Zhang, Y.; Ouyang, C.; Zhang, F.; Ma, J. Automated landslides detection for mountain cities using multi-temporal remote sensing imagery. Sensors 2018, 18, 821.

- Sameen, M.I.; Pradhan, B. Landslide Detection Using Residual Networks and the Fusion of Spectral and Topographic Information. IEEE Access 2019, 7, 114363–114373.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the 25th International Conference on Neural Information Processing Systems (NIPS’12), Lake Tahoe, NV, USA, 3–6 December 2012; Volume 1, pp. 1097–1105.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2323.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. Lect. Notes Comput. Sci. 2015, 9351, 234–241.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

- Zhang, L.; Zhang, L.; Du, B. Deep learning for remote sensing data: A technical tutorial on the state of the art. IEEE Geosci. Remote Sens. Mag. 2016, 4, 22–40.

- Bickel, V.T.; Lanaras, C.; Manconi, A.; Loew, S.; Mall, U. Automated detection of lunar rockfalls using a Faster Region-based Convolutional Neural Network. In Proceedings of the 2018 AGU Fall Meeting, Washington, DC, USA, 10–14 December 2018.

- Anantrasirichai, N.; Biggs, J.; Albino, F.; Hill, P.; Bull, D. Application of Machine Learning to Classification of Volcanic Deformation in Routinely Generated InSAR Data. J. Geophys. Res. Solid Earth 2018, 123, 6592–6606.

- Kalsnes, B.; Nadim, F. SafeLand: Changing pattern of landslides risk and strategies for its management. In Landslides: Global Risk Preparedness; Springer: Berlin/Heidelberg, Germany, 2013; pp. 95–114.

- European Commission. Big-Data Earth Observation Technology and Tools Enhancing Research and Development; BETTER Project|H2020|CORDIS; European Commission: Brussels, Belgium, 2017.

- Calvello, M.; Cascini, L.; Mastroianni, S. Landslide zoning over large areas from a sample inventory by means of scale-dependent terrain units. Geomorphology 2013, 182, 33–48.

- Blaschke, T.; Hay, G.J.; Kelly, M.; Lang, S.; Hofmann, P.; Addink, E.; Queiroz Feitosa, R.; van der Meer, F.; van der Werff, H.; van Coillie, F.; et al. Geographic Object-Based Image Analysis—Towards a new paradigm. ISPRS J. Photogramm. Remote Sens. 2014, 87, 180–191.

- Hay, G.J.; Castilla, G. Geographic Object-Based Image Analysis (GEOBIA): A new name for a new discipline. In Object-Based Image Analysis; Springer: Berlin/Heidelberg, Germany, 2008; pp. 75–89.

- Bickel, V.T.; Manconi, A.; Amann, F. Quantitative assessment of digital image correlation methods to detect and monitor surface displacements of large slope instabilities. Remote Sens. 2018, 10, 865.

- Stumpf, A.; Malet, J.P.; Delacourt, C. Correlation of satellite image time-series for the detection and monitoring of slow-moving landslides. Remote Sens. Environ. 2017, 189, 40–55.

- Lu, P.; Qin, Y.; Li, Z.; Mondini, A.C.; Casagli, N. Landslide mapping from multi-sensor data through improved change detection-based Markov random field. Remote Sens. Environ. 2019, 231.

- Baatz, M.; Schäpe, A. Multiresolution Segmentation: An optimization approach for high quality multi-scale image segmentation. In Angewandte Geographische Informations-Verarbeitung, XII; Wichmann Verlag: Berlin, Germany, 2000; pp. 12–23.

- Alvioli, M.; Mondini, A.C.; Fiorucci, F.; Cardinali, M.; Marchesini, I. Topography-driven satellite imagery analysis for landslide mapping. Geomat. Nat. Hazards Risk 2018, 9, 544–567.

- Wang, Y.; Fang, Z.; Hong, H. Comparison of convolutional neural networks for landslide susceptibility mapping in Yanshan County, China. Sci. Total. Environ. 2019, 666, 975–993.

- GDAL/OGR contributors. GDAL/OGR Geospatial Data Abstraction Software Library; Open Source Geospatial Foundation: Chicago, IL, USA, 2019.

- Conrad, O.; Bechtel, B.; Bock, M.; Dietrich, H.; Fischer, E.; Gerlitz, L.; Wehberg, J.; Wichmann, V.; Böhner, J. System for Automated Geoscientific Analyses (SAGA) v. 2.1.4. Geosci. Model Dev. 2015, 8, 1991–2007.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Distributed Systems. arXiv 2016, arXiv:1603.04467.

- Chollet, F. Keras. 2015. Available online: https://keras.io (accessed on 30 November 2019).

- He, H.; Garcia, E.A. Learning from imbalanced data. IEEE Trans. Knowl. Data Eng. 2009, 21, 1263–1284.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC Superpixels; EPFL Scientific Publications: Lausanne, Switzerland, 2010; p. 15.

- Ludwig, A. Automatisierte Erkennung von Tiefgreifenden Gravitativen Hangdeformationen Mittels Geomorphometrischer Analyse von Digitalen Geländemodellen. Ph.D. Thesis, University of Zurich, Zurich, Switzerland, 2017.

- Peng, D.; Zhang, Y.; Guan, H. End-to-End Change Detection for High Resolution Satellite Images Using Improved UNet++. Remote Sens. 2019, 11, 1382.

- Schuegraf, P.; Bittner, K. Automatic Building Footprint Extraction from Multi-Resolution Remote Sensing Images Using a Hybrid FCN. ISPRS Int. J. Geo-Inf. 2019, 8, 191.

- Drozdzal, M.; Vorontsov, E.; Chartrand, G.; Kadoury, S.; Pal, C. The Importance of Skip Connections in Biomedical Image Segmentation. In Deep Learning and Data Labeling for Medical Applications; Springer: Cham, Switzerland, 2016.

- Milletari, F.; Navab, N.; Ahmadi, S.A. V-Net: Fully convolutional neural networks for volumetric medical image segmentation. In Proceedings of the 2016 4th International Conference on 3D Vision (3DV 2016), Stanford, CA, USA, 25–28 October 2016; pp. 565–571.

- Sudre, C.H.; Li, W.; Vercauteren, T.; Ourselin, S.; Jorge Cardoso, M. Generalised dice overlap as a deep learning loss function for highly unbalanced segmentations. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2017; Volume 10553 LNCS, pp. 240–248.

- Salehi, S.S.M.; Erdogmus, D.; Gholipour, A. Tversky loss function for image segmentation using 3D fully convolutional deep networks. In International Workshop on Machine Learning in Medical Imaging; Springer: Cham, Switzerland, 2017.

- Wang, P.; Chung, A.C. Focal dice loss and image dilation for brain tumor segmentation. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2018; Volume 11045 LNCS, pp. 119–127.

- Lin, T.Y.; Goyal, P.; Girshick, R.B.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

- Sharifi-Mood, M.; Olsen, M.J.; Gillins, D.T.; Mahalingam, R. Performance-based, seismically-induced landslide hazard mapping of Western Oregon. Soil Dyn. Earthq. Eng. 2017, 103, 38–54.

- Burns, W.J.; Watzig, R.J. Statewide Landslide Information Database for Oregon; Technical Report; Oregon Department of Geology and Mineral Industries: Portland, OR, USA, 2014.

- Burns, W.; Herinckx, H.; Lindsey, K. Landslide Inventory of Portions of Northwest Douglas County, Oregon: Department of Geology and Mineral Industries, Open-File Report O-17-04, Esri Geodatabase, 4 Map pl., Scale 1:20,000 of Portions of Northwest Douglas County, Oregon; Technical Report; Oregon Department of Geology and Mineral Industries: Portland, OR, USA, 2017.

- Burns, W.J.; Madin, I.P. Protocol for Inventory Mapping of Landslide Deposits from Light Detection and RangIng (Lidar) Imagery; Oregon Department of Geology and Mineral Industries: Portland, OR, USA, 2009; 30p.

- Chicco, D. Ten quick tips for machine learning in computational biology. BioData Min. 2017, 10, 35.

- Brunetti, M.; Melillo, M.; Peruccacci, S.; Ciabatta, L.; Brocca, L. How far are we from the use of satellite rainfall products in landslide forecasting? Remote Sens. Environ. 2018, 210, 65–75.

- Christ, P.F.; Elshaer, M.E.A.; Ettlinger, F.; Tatavarty, S.; Bickel, M.; Bilic, P.; Rempfler, M.; Armbruster, M.; Hofmann, F.; D’Anastasi, M.; et al. Automatic Liver and Lesion Segmentation in CT Using Cascaded Fully Convolutional Neural Networks and 3D Conditional Random Fields. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Athens, Greece, 17–21 October 2016.

| Morphological Features (High Resolution DEM) | Hydrological Features (High Resolution DEM) | Land Cover Features (Satellite Images) | Geological Features (Geology) | Other Sources |
|---|---|---|---|---|
| Slope | Drainage Network | Land Use/Land Cover | Lithology | Rainfall Intensity |
| Elevation | Proximity to Rivers | GLCM Texture Features | Distance to Faults | |
| Aspect | Wetness Index | NDVI | Geo-structural | |
| Curvature | Flow Direction | Distance from Road Network | | |

| Study | ML Methods | Main Objective | Algorithms Used |
|---|---|---|---|
| Mondini et al. [31] | Pixel-Based | Mapping of Rainfall Induced Landslides | Change detection; LR; LDA; QDA |
| Martha et al. [36] | OBIA | Landslide Detection | Multi-Level Thresholding; k-Means Clustering |
| Stumpf and Kerle [21] | OBIA | Landslide Mapping | RF with an iterative scheme to compensate class imbalance |
| Moosavi et al. [30] | Pixel-Based and OBIA | Landslide Inventory Generation | Taguchi method to optimize ANN and SVM |
| Micheletti et al. [26] | Pixel-Based | Feature Selection; Landslide Susceptibility Mapping | SVM; RF; AdaBoost |
| Goetz et al. [35] | Pixel-Based | Landslide Susceptibility Modeling | Weight of Evidence Model; Generalized additive model; SVM; Penalized LDA with bundling; RF |
| Alvioli et al. [33] | OBIA | Automatic Delineation of Geomorphological Slope Units; Landslide Susceptibility Modeling | LR |
| Calvello et al. [37] | OBIA | Activity Mapping | Statistical multivariate |
| Keyport et al. [38] | Pixel-Based and OBIA | Comparative Analysis of Pixel-Based and OBIA Classification | K-means clustering; Elimination of false positive using object properties |
| Tanyas et al. [32] | OBIA | Rapid Prediction of Earthquake-Induced Landslides | LAND-slide Susceptibility Evaluation software which uses LR |
| Chen et al. [39] | Deep-learning | Identification of landslides using change detection | CNN for change detection; post-processing with STCA |
| Ghorbanzadeh et al. [29] | Deep-learning | Comparison of deep-learning methods | CNN, SVM, ANN, RF |
| Sameen and Pradhan [40] | Deep-learning | Landslide detection | CNN; spectral and topographic feature fusion |

| Source Data | Derived Features | Pixel-Based | Object-Based | Deep-Learning |
|---|---|---|---|---|
| Digital Terrain Model (from LiDAR) | Hillshade | √ | | |
| | Slope | √ | √ | √ |
| | Aspect (split into Northness and Eastness) | √ | √ | |
| | Roughness | √ | √ | √ |
| | Curvature | √ | | |
| | Valley Depth | √ | √ | |
| | Distance from River Channels | √ | √ | |
| | Wetness Index (as Binary Mask with a threshold) | √ | √ | |
| Optical Image (Sentinel-2) | NDVI | √ | √ | √ |
| | Band Brightness | √ | √ | √ |
| | GLCM Texture | √ | | |
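Two of the derived features listed in the table above have simple closed forms. The following is a minimal sketch, assuming that aspect is given in degrees clockwise from north and that NDVI is computed from near-infrared and red reflectance (for Sentinel-2 these are commonly bands B8 and B4). Neither convention is spelled out in this section, and the function names are illustrative only.

```python
import math


def northness_eastness(aspect_deg):
    """Split a circular aspect angle into two continuous components.

    Assumes aspect is measured in degrees clockwise from north. Using the
    cosine and sine avoids the artificial jump between 359 and 0 degrees.
    """
    angle = math.radians(aspect_deg)
    return math.cos(angle), math.sin(angle)


def ndvi(nir, red):
    """Normalized Difference Vegetation Index from NIR and red reflectance.

    Returns 0.0 when both bands are zero (an assumed no-data convention).
    """
    total = nir + red
    if total == 0:
        return 0.0
    return (nir - red) / total


# An east-facing slope has northness close to 0 and eastness close to 1.
north, east = northness_eastness(90.0)

# Dense vegetation reflects strongly in NIR, giving a high NDVI.
vegetated = ndvi(nir=0.5, red=0.1)
```

Splitting aspect this way lets a classifier treat north-facing slopes at 1 degree and 359 degrees as neighbours rather than as opposite ends of a scale.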

| Method | ML Algorithm | Accuracy | F1 Score | MCC |
|---|---|---|---|---|
| Pixel-Based | RF | 83.16% | 0.513 | 0.433 * |
| | ANN | 82.96% | 0.502 | 0.42 |
| | LR | 73.00% | 0.383 | 0.276 |
| Object-Based | ANN | 86.19% | 0.546 | 0.472 * |
| | LR | 80.26% | 0.507 | 0.44 |
| | RF | 81.69% | 0.508 | 0.434 |
| Deep-Learning | U-Net + ResNet34 | 85.02% | 0.562 | 0.495 * |
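Accuracy, F1 score, and the Matthews correlation coefficient (MCC) reported in the table above all follow from the four cells of a binary confusion matrix. The sketch below uses the standard textbook definitions. The function name and the example counts are hypothetical and are not taken from the study.

```python
import math


def classification_scores(tp, fp, fn, tn):
    """Return (accuracy, F1, MCC) from binary confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total

    # F1 is the harmonic mean of precision and recall.
    f1_denominator = 2 * tp + fp + fn
    f1 = 2 * tp / f1_denominator if f1_denominator else 0.0

    # MCC uses all four cells, so it stays informative under class imbalance.
    mcc_denominator = math.sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)
    )
    mcc = (tp * tn - fp * fn) / mcc_denominator if mcc_denominator else 0.0
    return accuracy, f1, mcc


# Hypothetical counts for an imbalanced scene with few landslide pixels.
accuracy, f1, mcc = classification_scores(tp=70, fp=130, fn=30, tn=770)
```

With these made-up counts the accuracy is 0.84 while F1 is about 0.47 and MCC about 0.42, which illustrates how accuracy can remain high on imbalanced data even when the positive class is detected only moderately well.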

| Method | POD | POFD | (POD-POFD) |
|---|---|---|---|
| Pixel-Based (RF) | 0.66 | 0.14 | 0.52 |
| Object-Based (ANN) | 0.48 | 0.06 | 0.42 |
| Deep-Learning | 0.72 | 0.13 | 0.59 |
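The probability of detection (POD) and probability of false detection (POFD) in the table above are commonly defined as the true-positive rate and the false-positive rate, respectively. The sketch below assumes those standard definitions. The function name is illustrative, and the counts are hypothetical values chosen only so that the rates match the Deep-Learning row; they are not the study's actual pixel counts.

```python
def detection_scores(tp, fp, fn, tn):
    """Return (POD, POFD, POD - POFD) from binary confusion-matrix counts."""
    # POD: share of true landslide samples that were detected.
    pod = tp / (tp + fn) if (tp + fn) else 0.0
    # POFD: share of non-landslide samples wrongly flagged as landslide.
    pofd = fp / (fp + tn) if (fp + tn) else 0.0
    return pod, pofd, pod - pofd


# Hypothetical counts reproducing POD = 0.72 and POFD = 0.13.
pod, pofd, skill = detection_scores(tp=72, fp=117, fn=28, tn=783)
```

The difference POD - POFD ranges from -1 to 1, with 0 indicating no skill beyond chance, so a larger value means detections are gained without a matching rise in false alarms.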

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Prakash, N.; Manconi, A.; Loew, S. Mapping Landslides on EO Data: Performance of Deep Learning Models vs. Traditional Machine Learning Models. Remote Sens. 2020, 12, 346. https://0-doi-org.brum.beds.ac.uk/10.3390/rs12030346
