Detection, Segmentation, and Model Fitting of Individual Tree Stems from Airborne Laser Scanning of Forests Using Deep Learning

Abstract

1. Introduction

1.1. Related Work

1.2. Contributions of This Work

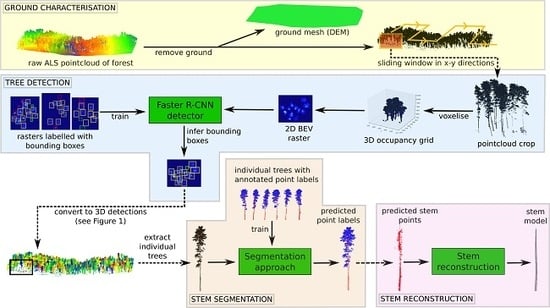

- A tree detection approach proposed in previous work [21] is extended by adding new representations for encoding 3D pointcloud data into 2D rasterised summaries for detection. Evaluations are carried out with multiple aerial datasets.

- A stem segmentation approach proposed in previous work [21] is extended by incorporating voxel representations that include LiDAR return intensity, and a new point-based deep learning architecture (based on Pointnet [24]) is developed for tree pointcloud segmentation. Evaluations are carried out with multiple aerial datasets, and different segmentation architectures are compared.

- We develop a new stem reconstruction technique using RANdom SAmple Consensus (RANSAC) and non-linear least squares that fits a flexible geometric model of a tree’s main stem to segmented stem points, computing the tree centreline position and stem radius at multiple points along the length of the stem. This model can then be used to extract inventory metrics such as height and diameter.
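To make the last contribution concrete, the following is a minimal sketch of the general idea of combining RANSAC with non-linear least squares. It is not the paper's stem model: as a simplifying assumption it fits a single circle (centre and radius) to the points of one horizontal slice of a stem, whereas the paper fits a flexible model along the whole stem. The function names, the inlier tolerance, and the synthetic data are all hypothetical.

```python
import numpy as np

def circle_from_three_points(p):
    """Circle (cx, cy, r) through three 2D points, or None if they are collinear."""
    a = 2.0 * (p[1:] - p[0])                              # 2x2 linear system for the centre
    b = np.sum(p[1:] ** 2, axis=1) - np.sum(p[0] ** 2)
    if abs(np.linalg.det(a)) < 1e-12:
        return None
    cx, cy = np.linalg.solve(a, b)
    return cx, cy, np.hypot(p[0, 0] - cx, p[0, 1] - cy)

def ransac_circle(xy, n_iter=200, tol=0.02, seed=None):
    """Keep the 3-point circle hypothesis with the most points within tol (metres)."""
    rng = np.random.default_rng(seed)
    best_model, best_inliers = None, None
    for _ in range(n_iter):
        sample = xy[rng.choice(len(xy), size=3, replace=False)]
        model = circle_from_three_points(sample)
        if model is None:
            continue
        cx, cy, r = model
        resid = np.abs(np.hypot(xy[:, 0] - cx, xy[:, 1] - cy) - r)
        inliers = resid < tol
        if best_inliers is None or inliers.sum() > best_inliers.sum():
            best_model, best_inliers = model, inliers
    return best_model, best_inliers

def refine_circle(xy, model, n_steps=20):
    """Gauss-Newton refinement of the geometric residual dist(point, centre) - r."""
    params = np.array(model, dtype=float)
    for _ in range(n_steps):
        dx, dy = xy[:, 0] - params[0], xy[:, 1] - params[1]
        dist = np.hypot(dx, dy)
        resid = dist - params[2]
        jac = np.column_stack([-dx / dist, -dy / dist, -np.ones_like(dist)])
        step, *_ = np.linalg.lstsq(jac, -resid, rcond=None)
        params += step
        if np.linalg.norm(step) < 1e-10:
            break
    return params

# Synthetic slice (hypothetical values): a partial arc of a 0.15 m radius stem
# centred at (1.0, -2.0), with 5 mm noise and 20 scattered outlier points.
rng = np.random.default_rng(0)
theta = rng.uniform(0.0, np.pi, 80)
stem = np.column_stack([1.0 + 0.15 * np.cos(theta), -2.0 + 0.15 * np.sin(theta)])
stem += rng.normal(0.0, 0.005, stem.shape)
clutter = np.column_stack([rng.uniform(0.5, 1.5, 20), rng.uniform(-2.5, -1.5, 20)])
points = np.vstack([stem, clutter])

model, inliers = ransac_circle(points, seed=1)
cx, cy, r = refine_circle(points[inliers], model)
print(f"centre=({cx:.3f}, {cy:.3f}) radius={r:.3f}")
```

RANSAC supplies an initial estimate that tolerates mislabelled or foliage points, and the least-squares step then refines it using only the inliers. Repeating such a fit at several heights would give centre and radius estimates along a stem, which is one simple way to picture the output described above.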

2. Materials

2.1. Study Areas

2.2. Data Collection

3. Methods

3.1. Overview

3.2. Ground Characterisation and Removal

3.3. Individual Tree Detection

3.3.1. Individual Tree Detection: BEV Representations

3.3.2. Individual Tree Detection: Training

3.3.3. Individual Tree Detection: Inference

3.4. Stem Segmentation

3.4.1. Stem Segmentation: 3D-FCN Architecture for Voxel Segmentation

3.4.2. Stem Segmentation: Pointnet Architecture for Point Segmentation

3.5. Stem Reconstruction/Model Fitting

4. Experimental Setup

4.1. Metrics

5. Results

5.1. Ground Characterisation and Removal

5.2. Individual Tree Detection

5.3. Stem Segmentation

5.4. Stem Reconstruction/Model Fitting

6. Discussion

6.1. Individual Tree Detection

6.2. Stem Segmentation

6.3. Stem Reconstruction/Model Fitting

6.4. Scalability and Generalisation

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Weiskittel, A.; Hann, D.; Vanclay, J.K.J. Forest Growth and Yield Modeling; Wiley-Blackwell: Oxford, UK, 2011.

2. West, P.W. Tree and Forest Measurement, 3rd ed.; Springer: Cham, Switzerland, 2015.

3. Högström, T.; Wernersson, Å. On Segmentation, Shape Estimation and Navigation Using 3D Laser Range Measurements of Forest Scenes. IFAC Proc. Vol. 1998, 31, 423–428.

4. Forsman, P.; Halme, A. 3-D mapping of natural environments with trees by means of mobile perception. IEEE Trans. Robot. 2005, 21, 482–490.

5. Bienert, A.; Scheller, S.; Keane, E.; Mohan, F.; Nugent, C. Tree detection and diameter estimations by analysis of forest terrestrial laserscanner point clouds. In Proceedings of the ISPRS Workshop on Laser Scanning 2007 and SilviLaser 2007, Espoo, Finland, 12–14 September 2007; pp. 50–55.

6. Othmani, A.; Piboule, A.; Krebs, M.; Stolz, C. Towards automated and operational forest inventories with T-Lidar. In Proceedings of the 11th International Conference on LiDAR Applications for Assessing Forest Ecosystems (SilviLaser 2011), Hobart, Australia, 16–20 October 2011; pp. 1–9.

7. Liang, X.; Litkey, P.; Hyyppä, J.; Kaartinen, H.; Vastaranta, M.; Holopainen, M. Automatic stem mapping using single-scan terrestrial laser scanning. IEEE Trans. Geosci. Remote Sens. 2012, 50, 661–670.

8. Pueschel, P.; Newnham, G.; Rock, G.; Udelhoven, T.; Werner, W.; Hill, J. The influence of scan mode and circle fitting on tree stem detection, stem diameter and volume extraction from terrestrial laser scans. ISPRS J. Photogramm. Remote Sens. 2013, 77, 44–56.

9. Olofsson, K.; Holmgren, J.; Olsson, H. Tree stem and height measurements using terrestrial laser scanning and the RANSAC algorithm. Remote Sens. 2014, 6, 4323–4344.

10. Liang, X.; Kankare, V.; Yu, X.; Hyyppä, J.; Holopainen, M. Automated stem curve measurement using terrestrial laser scanning. IEEE Trans. Geosci. Remote Sens. 2014, 52, 1739–1748.

11. Olofsson, K.; Holmgren, J. Single tree stem profile detection using terrestrial laser scanner data, flatness saliency features and curvature properties. Forests 2016, 7, 207.

12. Heinzel, J.; Huber, M.O. Detecting tree stems from volumetric TLS data in forest environments with rich understory. Remote Sens. 2017, 9, 9.

13. Xi, Z.; Hopkinson, C.; Chasmer, L. Filtering Stems and Branches from Terrestrial Laser Scanning Point Clouds Using Deep 3-D Fully Convolutional Networks. Remote Sens. 2018, 10, 1215.

14. Hyyppä, J.; Kelle, O.; Lehikoinen, M.; Inkinen, M. A segmentation-based method to retrieve stem volume estimates from 3-D tree height models produced by laser scanners. IEEE Trans. Geosci. Remote Sens. 2001, 39, 969–975.

15. Persson, A.; Holmgren, J.; Soderman, U. Detecting and measuring individual trees using an airborne laser scanner. Photogramm. Eng. Remote Sens. 2002, 68, 925–932.

16. Maltamo, M.; Peuhkurinen, J.; Malinen, J.; Vauhkonen, J.; Packalén, P.; Tokola, T. Predicting tree attributes and quality characteristics of scots pine using airborne laser scanning data. Silva Fennica 2009, 43, 507–521.

17. Vauhkonen, J.; Korpela, I.; Maltamo, M.; Tokola, T. Imputation of single-tree attributes using airborne laser scanning-based height, intensity, and alpha shape metrics. Remote Sens. Environ. 2010, 114, 1263–1276.

18. Næsset, E. Predicting forest stand characteristics with airborne scanning laser using a practical two-stage procedure and field data. Remote Sens. Environ. 2002, 80, 88–99.

19. Gobakken, T.; Næsset, E. Estimation of diameter and basal area distributions in coniferous forest by means of airborne laser scanner data. Scand. J. For. Res. 2004, 19, 529–542.

20. Maltamo, M.; Suvanto, A.; Packalén, P. Comparison of basal area and stem frequency diameter distribution modelling using airborne laser scanner data and calibration estimation. For. Ecol. Manag. 2007, 247, 26–34.

21. Windrim, L.; Bryson, M. Forest Tree Detection and Segmentation using High Resolution Airborne LiDAR. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 4–8 November 2019; pp. 3898–3904.

22. Maturana, D.; Scherer, S. VoxNet: A 3D Convolutional Neural Network for Real-Time Object Recognition. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–3 October 2015; pp. 922–928.

23. Wu, Z.; Song, S.; Khosla, A.; Yu, F.; Zhang, L.; Tang, X.; Xiao, J. 3D ShapeNets: A deep representation for volumetric shapes. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1912–1920.

24. Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017), Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

25. Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-View 3D Object Detection Network for Autonomous Driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017), Honolulu, HI, USA, 21–26 July 2017; pp. 1907–1915.

26. Beltran, J.; Guindel, C.; Moreno, F.M.; Cruzado, D.; García, F.; Escalera, A.D. BirdNet: A 3D Object Detection Framework from LiDAR information. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018.

27. Caltagirone, L.; Scheidegger, S.; Svensson, L.; Wahde, M. Fast LIDAR-based road detection using fully convolutional neural networks. In Proceedings of the IEEE Intelligent Vehicles Symposium, Redondo Beach, CA, USA, 11–14 June 2017; pp. 1019–1024.

28. Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum PointNets for 3D Object Detection from RGB-D Data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

29. Xu, Q.; Maltamo, M.; Tokola, T.; Hou, Z.; Li, B. Predicting tree diameter using allometry described by non-parametric locally-estimated copulas from tree dimensions derived from airborne laser scanning. For. Ecol. Manag. 2018, 434, 205–212.

30. Vastaranta, M.; Saarinen, N.; Kankare, V.; Holopainen, M.; Kaartinen, H.; Hyyppä, J.; Hyyppä, H. Multisource single-tree inventory in the prediction of tree quality variables and logging recoveries. Remote Sens. 2014, 6, 3475–3491.

31. Kankare, V.; Liang, X.; Vastaranta, M.; Yu, X.; Holopainen, M.; Hyyppä, J. Diameter distribution estimation with laser scanning based multisource single tree inventory. ISPRS J. Photogramm. Remote Sens. 2015, 108, 161–171.

32. Chen, Q.; Baldocchi, D.; Gong, P.; Kelly, M. Isolating Individual Trees in a Savanna Woodland Using Small Footprint Lidar Data. Photogramm. Eng. Remote Sens. 2006, 72, 923–932.

33. Ene, L.; Næsset, E.; Gobakken, T. Single tree detection in heterogeneous boreal forests using airborne laser scanning and area-based stem number estimates. Int. J. Remote Sens. 2012, 33, 5171–5193.

34. Dalponte, M.; Ørka, H.O.; Ene, L.T.; Gobakken, T.; Næsset, E. Tree crown delineation and tree species classification in boreal forests using hyperspectral and ALS data. Remote Sens. Environ. 2014, 140, 306–317.

35. Zhen, Z.; Quackenbush, L.J.; Zhang, L. Impact of tree-oriented growth order in marker-controlled region growing for individual tree crown delineation using airborne laser scanner (ALS) data. Remote Sens. 2013, 6, 555–579.

36. Smits, I.; Prieditis, G.; Dagis, S.; Dubrovskis, D. Individual tree identification using different LIDAR and optical imagery data processing methods. Biosyst. Inf. Technol. 2012, 1, 19–24.

37. Gupta, S.; Koch, B.; Weinacker, H. Tree Species Detection Using Full Waveform Lidar Data In A Complex Forest. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2010, XXXVIII, 249–254.

38. Wang, Y.; Weinacker, H.; Koch, B.; Stereńczak, K. Lidar Point Cloud Based Fully Automatic 3D Single Tree Modelling in Forest and Evaluations of the Procedure. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, XXXVII, 45–52.

39. Dong, T.; Zhang, X.; Ding, Z.; Fan, J. Multi-layered tree crown extraction from LiDAR data using graph-based segmentation. Comput. Electron. Agric. 2020, 170, 105213.

40. Chen, W.; Hu, X.; Chen, W.; Hong, Y.; Yang, M. Airborne LiDAR Remote Sensing for Individual Tree Forest Inventory Using Trunk Detection-Aided Mean Shift Clustering Techniques. Remote Sens. 2018, 10, 1078.

41. Kaartinen, H.; Hyyppä, J.; Yu, X.; Vastaranta, M.; Hyyppä, H.; Kukko, A.; Holopainen, M.; Heipke, C.; Hirschmugl, M.; Morsdorf, F.; et al. An international comparison of individual tree detection and extraction using airborne laser scanning. Remote Sens. 2012, 4, 950–974.

42. Solberg, S.; Naesset, E.; Bollandsas, O.M. Single tree segmentation using airborne laser scanner data in a structurally heterogeneous spruce forest. Photogramm. Eng. Remote Sens. 2006, 72, 1369–1378.

43. Pitkänen, J.; Maltamo, M. Adaptive Methods for Individual Tree Detection on Airborne Laser Based Canopy Height Model. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2004, 36, 187–191.

44. Luostari, T.; Lahivaara, T.; Packalen, P.; Seppanen, A. Bayesian approach to single-tree detection in airborne laser scanning – Use of training data for prior and likelihood modeling. J. Phys. Conf. Ser. 2018, 1047, 012008.

45. Amiri, N.; Polewski, P.; Heurich, M.; Krzystek, P.; Skidmore, A.K. Adaptive stopping criterion for top-down segmentation of ALS point clouds in temperate coniferous forests. ISPRS J. Photogramm. Remote Sens. 2018, 141, 265–274.

46. Liang, X.; Kankare, V.; Hyyppä, J.; Wang, Y.; Kukko, A.; Haggrén, H.; Yu, X.; Kaartinen, H.; Jaakkola, A.; Guan, F.; et al. Terrestrial laser scanning in forest inventories. ISPRS J. Photogramm. Remote Sens. 2016, 115, 63–77.

47. Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. In Proceedings of the Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

48. Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S.E.; Bronstein, M.M.; Solomon, J.M. Dynamic Graph CNN for Learning on Point Clouds. ACM Trans. Graph. (TOG) 2019, 38, 1–12.

49. Zhou, Y.; Tuzel, O. VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4490–4499.

50. Cheng, G.; Zhou, P.; Han, J. Learning Rotation-Invariant Convolutional Neural Networks for Object Detection in VHR Optical Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7405–7415.

51. Wu, H.; Zhang, H.; Zhang, J.; Xu, F. Typical Target Detection in Satellite Images Based on Convolutional Neural Networks. In Proceedings of the 2015 IEEE International Conference on Systems, Man, and Cybernetics, Hong Kong, China, 9–12 October 2015; pp. 2956–2961.

52. Zhang, L.; Shi, Z.; Wu, J. A Hierarchical Oil Tank Detector with Deep Surrounding Features for High-Resolution Optical Satellite Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 4895–4909.

53. Windrim, L.; Ramakrishnan, R.; Melkumyan, A.; Murphy, R. Hyperspectral CNN Classification with Limited Training Samples. In Proceedings of the British Machine Vision Conference (BMVC), London, UK, 4–7 September 2017; pp. 2.1–2.12.

54. Windrim, L.; Melkumyan, A.; Murphy, R.J.; Chlingaryan, A.; Ramakrishnan, R. Pretraining for Hyperspectral Convolutional Neural Network Classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2798–2810.

55. Xiu, H.; Yan, W.; Vinayaraj, P.; Nakamura, R.; Kim, K.S. 3D semantic segmentation for high-resolution aerial survey derived point clouds using deep learning (demonstration). In Proceedings of the International Conference on Advances in Geographic Information Systems 2018, Washington, DC, USA, 1 November 2018; pp. 588–591.

56. Liu, Y.; Piramanayagam, S.; Monteiro, S.T.; Saber, E. Dense Semantic Labeling of Very-High-Resolution Aerial Imagery and LiDAR with Fully-Convolutional Neural Networks and Higher-Order CRFs. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 1561–1570.

57. Pratikakis, I.; Dupont, F.; Ovsjanikov, M. Unstructured point cloud semantic labeling using deep segmentation networks. In Proceedings of the Eurographics Workshop on 3D Object Retrieval (2017), Lyon, France, 23–24 April 2017.

58. Guan, H.; Yu, Y.; Ji, Z.; Li, J.; Zhang, Q. Deep learning-based tree classification using mobile LiDAR data. Remote Sens. Lett. 2015, 6, 864–873.

59. Zou, X.; Cheng, M.; Wang, C.; Xia, Y.; Li, J. Tree Classification in Complex Forest Point Clouds Based on Deep Learning. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2360–2364.

60. Mizoguchi, T.; Ishii, A.; Nakamura, H.; Inoue, T.; Takamatsu, H. Lidar-based individual tree species classification using convolutional neural network. Videometrics Range Imaging Appl. XIV 2017, 10332, 103320O.

61. Hamraz, H.; Jacobs, N.B.; Contreras, M.A.; Clark, C.H. Deep learning for conifer/deciduous classification of airborne LiDAR 3D point clouds representing individual trees. ISPRS J. Photogramm. Remote Sens. 2018, 158, 219–230.

62. Ayrey, E.; Hayes, D.J. The Use of Three-Dimensional Convolutional Neural Networks to Interpret LiDAR for Forest Inventory. Remote Sens. 2018, 10, 649.

63. Bentley, J.L. Multidimensional binary search trees used for associative searching. Commun. ACM 1975, 18, 509–517.

64. Delaunay, B. Sur la sphere vide. J. Phys. Radium 1934, 7, 735–739.

65. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

66. Holmgren, J.; Lindberg, E. Tree crown segmentation based on a tree crown density model derived from Airborne Laser Scanning. Remote Sens. Lett. 2019, 10, 1143–1152.

67. Lee, A.C.; Lucas, R.M. A LiDAR-derived canopy density model for tree stem and crown mapping in Australian forests. Remote Sens. Environ. 2007, 111, 493–518.

68. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

69. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the 13th European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

70. Girardeau-Montaut, D. Cloud Compare – 3D Point Cloud and Mesh Processing Software. 2015. Available online: https://www.danielgm.net/cc/ (accessed on 6 May 2019).

71. Milletari, F.; Navab, N.; Ahmadi, S.A. V-net: Fully convolutional neural networks for volumetric medical image segmentation. In Proceedings of the International Conference on 3D Vision 2016, Stanford, CA, USA, 25–28 October 2016; pp. 565–571.

72. Kingma, D.P.; Ba, J.L. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

73. Masters, D.; Luschi, C. Revisiting Small Batch Training for Deep Neural Networks. arXiv 2018, arXiv:1804.07612.

74. Bryson, M. PointcloudITD: A software package for individual tree detection and counting. In Deployment and Integration of Cost-Effective, High Spatial Resolution, Remotely Sensed Data for the Australian Forestry Industry; FWPA Technical Report; FWPA: Melbourne, Australia, 2017; pp. 1–19. Available online: https://www.fwpa.com.au/images/pointcloudITD_information.pdf (accessed on 6 May 2019).

75. Li, W.; Guo, Q.; Jakubowski, M.K.; Kelly, M. A New Method for Segmenting Individual Trees from the Lidar Point Cloud. Photogramm. Eng. Remote Sens. 2013, 78, 75–84.

76. Lalonde, J.; Vandapel, N.; Hebert, M. Automatic three-dimensional point cloud processing for forest inventory. Robot. Inst. 2006, 334.

77. Yao, T.; Yang, X.; Zhao, F.; Wang, Z.; Zhang, Q.; Jupp, D.; Lovell, J.; Culvenor, D.; Newnham, G.; Ni-Meister, W.; et al. Measuring forest structure and biomass in New England forest stands using Echidna ground-based lidar. Remote Sens. Environ. 2011, 115, 2965–2974.

78. Lindberg, E.; Holmgren, J.; Olofsson, K.; Olsson, H. Estimation of stem attributes using a combination of terrestrial and airborne laser scanning. Eur. J. For. Res. 2012, 131, 1917–1931.

79. Vauhkonen, J.; Ene, L.; Gupta, S.; Heinzel, J.; Holmgren, J.; Pitkänen, J.; Solberg, S.; Wang, Y.; Weinacker, H.; Hauglin, K.M.; et al. Comparative testing of single-tree detection algorithms under different types of forest. Forestry 2012, 85, 27–40.

80. Dalponte, M.; Reyes, F.; Kandare, K.; Gianelle, D. Delineation of individual tree crowns from ALS and hyperspectral data: A comparison among four methods. Eur. J. Remote Sens. 2015, 48, 365–382.

| Dataset | Fold | Detection Train | Detection Val | Detection Test | Segmentation Train | Segmentation Val | Segmentation Test |
|---|---|---|---|---|---|---|---|
| Tumut | 1 | 164 | 12 | 12 | 60 | 3 | 12 |
| Tumut | 2 | 168 | 12 | 8 | 60 | 7 | 8 |
| Tumut | 3 | 165 | 12 | 11 | 60 | 4 | 11 |
| Carabost | 1 | 233 | 17 | 9 | 66 | 6 | 9 |
| Carabost | 2 | 216 | 26 | 17 | 60 | 4 | 17 |
| Carabost | 3 | 209 | 37 | 13 | 66 | 2 | 13 |

| Dataset | Method | Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|---|
| Tumut | CHM + watershed | | | | |
| Tumut | DBSCAN | | | | |
| Tumut | RCNN vd * | | | | |
| Tumut | RCNN vd/mh/mr * | | | | |
| Carabost | CHM + watershed | | | | |
| Carabost | DBSCAN | | | | |
| Carabost | RCNN vd * | | | | |
| Carabost | RCNN vd/mh/mr * | | | | |

| Dataset | Method | Stem IoU | Stem Precision | Stem Recall | Foliage IoU | Foliage Precision | Foliage Recall |
|---|---|---|---|---|---|---|---|
| Tumut | Eigen features | | | | | | |
| Tumut | Eigen features (r) | | | | | | |
| Tumut | RANSAC | | | | | | |
| Tumut | Voxel 3D-FCN * | | | | | | |
| Tumut | Voxel 3D-FCN (r) * | | | | | | |
| Tumut | Pointnet * | | | | | | |
| Tumut | Pointnet (r) * | | | | | | |
| Carabost | Eigen features | | | | | | |
| Carabost | Eigen features (r) | | | | | | |
| Carabost | RANSAC | | | | | | |
| Carabost | Voxel 3D-FCN * | | | | | | |
| Carabost | Voxel 3D-FCN (r) * | | | | | | |
| Carabost | Pointnet * | | | | | | |
| Carabost | Pointnet (r) * | | | | | | |

| DBH Estimates from | DBH RMSE | DBH Max. Error | Avg. Perc. Error |
|---|---|---|---|
| Manual Seg | 9.45 cm | 17.2 cm | 9.05% |
| Pipeline Seg | 15.39 cm | 24.5 cm | 15.85% |
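
For readers unfamiliar with the three error summaries in the table above, the sketch below shows how such figures are commonly computed from paired estimates and reference measurements of diameter at breast height (DBH). The definitions used here (root-mean-square error, largest absolute error, and mean absolute error relative to the reference value) are assumptions about the usual conventions, not a restatement of the paper's Section 4.1, and the input values are hypothetical rather than data from the study.

```python
import numpy as np

def dbh_error_summary(estimated_cm, reference_cm):
    """Summarise DBH errors under assumed, conventional definitions."""
    est = np.asarray(estimated_cm, dtype=float)
    ref = np.asarray(reference_cm, dtype=float)
    err = est - ref
    return {
        "rmse_cm": float(np.sqrt(np.mean(err ** 2))),                 # root-mean-square error
        "max_error_cm": float(np.max(np.abs(err))),                   # largest absolute error
        "avg_perc_error": float(np.mean(np.abs(err) / ref) * 100.0),  # mean relative error, in %
    }

# Hypothetical DBH values in centimetres (not from the paper).
summary = dbh_error_summary([30.0, 42.0, 55.0], [33.0, 40.0, 50.0])
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

Because RMSE squares each error before averaging, a few badly fitted stems raise it more than they raise the average percentage error, which is worth keeping in mind when comparing the two rows.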

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Windrim, L.; Bryson, M. Detection, Segmentation, and Model Fitting of Individual Tree Stems from Airborne Laser Scanning of Forests Using Deep Learning. Remote Sens. 2020, 12, 1469. https://0-doi-org.brum.beds.ac.uk/10.3390/rs12091469
