1. Introduction

Large-scale natural disasters, such as earthquakes, damage huge numbers of buildings over wide areas. To plan an effective emergency response and early-stage recovery, it is indispensable to identify the amount and distribution of structural damage immediately after the disaster. Remote sensing has been recognized as a suitable source of timely data for detecting building damage over large areas (e.g., [1,2]). Although visual interpretation has been applied to detect building damage from optical remote-sensing images [3,4,5], identifying the damage over a wide area in this way requires a great deal of time and human resources. Gray-level or texture-based change detection techniques between pre- and post-event images have been introduced to estimate damage distributions automatically or semi-automatically [6,7,8,9,10,11]. Such change-based approaches, however, cannot be applied when no pre-event image of the area of interest is available. A robust methodology that automatically detects building damage from post-disaster images alone therefore needs to be developed for rapid and effective damage assessment.

Recently, deep-learning and artificial intelligence solutions have developed dramatically in various fields of science and engineering. The convolutional neural network (CNN), one of the deep-learning techniques, is now recognized as one of the most suitable approaches for image recognition (e.g., [12,13]). CNN-based approaches have been applied to remote-sensing images not only for scene and land-cover classification [14,15] but also for detecting building damage in earthquake disasters [16,17,18,19,20,21,22,23]. Duarte et al. [16] demonstrated a CNN-based feature fusion technique for identifying damaged regions in remote-sensing images. Nex et al. [17] examined transfer learning for detecting visible structural damage in satellite, airborne and UAV images. Ma et al. [18,19] applied CNN-based approaches to estimate block-level damage distribution and to extract the damaged parts of buildings from satellite images, respectively. Cooner et al. [20] and Ji et al. [21,22] applied machine- or deep-learning techniques to create building-by-building damage maps by including building footprints in the image analysis. Since building footprint data for most urban areas are available from open databases such as OpenStreetMap [24], building footprints have become a practical means of delineating the locations of individual buildings in an image.

These studies showed that CNN-based approaches can detect building damage successfully when the CNN architectures are adapted to the datasets at hand. Most of the previous studies, however, classified building damage into only two classes: damaged and non-damaged, or collapsed and non-collapsed. As pointed out by Ci et al. [23], identifying an intermediate damage level is important for actual post-disaster activities, including early-stage recovery planning and disaster waste management. Moreover, most previous studies used damage data visually interpreted from remote-sensing images as reference training data. As pointed out in previous validation studies of visual interpretation [3,25], such data contained uncertainties and misclassifications not only at intermediate damage levels but also at the no-damage and severe-damage levels. To develop a reliable damage detection technique, ground truth data obtained in field damage investigations need to be used as reference training data.

When building damage is identified from vertically observed remote-sensing images, the building roof is an important factor for classifying the damage grade, because damage to the sides of a building, such as walls and columns, and internal damage cannot be observed directly in the images. Since the roof is one of the most fragile parts of a residential house, residents cover damaged roofs with blue plastic tarps immediately after a natural disaster to temporarily protect their houses from further damage, such as water leakage. Although it is difficult to determine the detailed damage grade of tarp-covered buildings from remote-sensing images, the blue tarps on the roofs can themselves be recognized as a sign of damage. Such blue tarp-covered buildings, however, have rarely been considered explicitly in remote-sensing-based damage detection studies, except in a few cases [26,27].

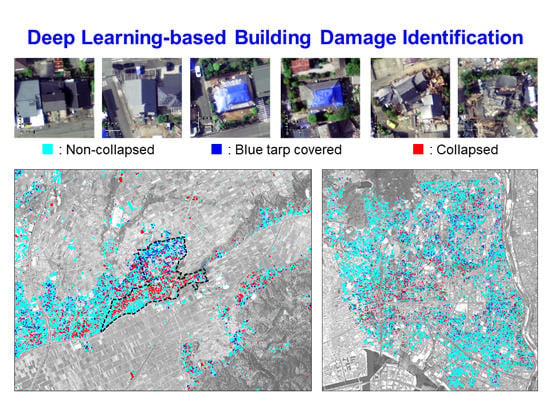

In this study, a CNN-based building damage detection technique was developed to automatically classify damage into collapsed, non-collapsed and blue tarp-covered buildings by analyzing post-disaster aerial images and building damage inventories from the 2016 Kumamoto and 1995 Kobe earthquakes in Japan. The building damage inventories obtained in field investigations by structural engineers were used as the main reference ground truth data. Blue tarp-covered buildings were visually identified from the images, and their damage grades were discussed based on the damage inventories. Image patches were extracted from the post-disaster images with the help of the building footprints in the damage inventories. The CNN-based damage classification model was then trained on the damage grades of the buildings in the image patches. Finally, the applicability of the developed CNN architecture was examined by estimating building damage from post-event aerial images of a town in Chiba prefecture, Japan, that was damaged by a typhoon in September 2019.
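The paragraph above states that image patches were extracted with the help of building footprints. A minimal sketch of that step follows, assuming (hypothetically) that each footprint has already been projected into the image's pixel coordinates, that the image is a single band held as nested lists, and that a fixed `margin` of pixels around the footprint's bounding box is kept. The function names and the margin are illustrative, not the authors' implementation, and resampling the patch to a fixed CNN input size is left out.

```python
from typing import List, Sequence, Tuple

Image = List[List[int]]      # row-major grid of pixel values (single band, for simplicity)
Point = Tuple[float, float]  # (x, y) = (column, row) in pixel coordinates


def footprint_bbox(footprint: Sequence[Point]) -> Tuple[int, int, int, int]:
    """Return the integer bounding box (x_min, y_min, x_max, y_max) of a footprint polygon."""
    xs = [p[0] for p in footprint]
    ys = [p[1] for p in footprint]
    return int(min(xs)), int(min(ys)), int(max(xs)), int(max(ys))


def extract_patch(image: Image, footprint: Sequence[Point], margin: int = 2) -> Image:
    """Crop the footprint's bounding box, enlarged by `margin` pixels (hypothetical
    parameter) and clipped so the patch never extends past the image edges."""
    height, width = len(image), len(image[0])
    x0, y0, x1, y1 = footprint_bbox(footprint)
    # Enlarge the box so some surroundings (e.g., rubble next to walls) stay in the patch.
    x0, y0 = max(0, x0 - margin), max(0, y0 - margin)
    x1, y1 = min(width - 1, x1 + margin), min(height - 1, y1 + margin)
    return [row[x0 : x1 + 1] for row in image[y0 : y1 + 1]]
```

For example, on a 10 × 10 test image, a 3 × 2 pixel footprint with `margin=1` yields a 4-row by 5-column patch. Keeping a small margin is a design choice worth considering here, since the discussion of soft-story collapse notes that rubble around a building can be the only visible sign of damage.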

4. CNN-Based Building Damage Estimations

The developed CNN model was applied to the entire building dataset.

Figure 9a,b show the distributions of the estimated building damage for Mashiki town and Nishinomiya city, respectively. Compared with the damage inventories shown in Figure 3a,b, most of the D5–D6/major damage buildings were detected as collapsed by the CNN model. However, the extent of collapsed buildings appears to be overestimated, since some D0–D1/negligible damage buildings were falsely classified as collapsed. The blue tarp-covered buildings were distributed mainly between the areas of collapsed and non-collapsed buildings. A similar trend was also confirmed in the damage inventories, in which the D2–D4 (moderate and heavy damage) buildings were located around the D5–D6/major damage buildings.

Table 7 summarizes the CNN-based classifications. The number in each cell indicates the number of buildings classified into each class by the CNN model. For the accuracy assessment, the cells were colored according to three categories: underestimated (light blue), correctly estimated (light green) and overestimated (pink). Precision and recall were calculated for each damage class. The precisions for the non-collapsed and collapsed buildings were higher than 75% in the AIJ- and V-data. Whereas the recalls for the non-collapsed buildings were higher than 70% in the AIJ- and V-data, the recalls for the collapsed buildings were lower than 40% in all three inventories, indicating that overestimations occurred in the collapsed-building classification. Since the blue tarp-covered buildings do not correspond exactly to moderate or heavy damage, their precision and recall are shown in brackets. The recalls for the blue tarp buildings were higher than 65%, but the precisions were lower than 30%. This is because not all intermediately damaged buildings were covered with blue tarps, whereas the buildings with blue tarps were typically assigned to an intermediate damage level.
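Per-class precision and recall, as used in the paragraph above, can be read off a confusion matrix. The sketch below uses the standard definitions (precision: correct predictions of a class divided by all CNN predictions of that class; recall: correct predictions divided by all reference buildings of that class) and assumes, hypothetically, that rows hold the inventory (reference) class and columns hold the CNN class. The counts in the usage example are invented for illustration and are not the values of Table 7.

```python
from typing import Dict, List, Tuple


def precision_recall(matrix: List[List[int]], labels: List[str]) -> Dict[str, Tuple[float, float]]:
    """Per-class (precision, recall) from a square confusion matrix.

    Assumed orientation: matrix[i][j] = number of buildings whose reference
    (inventory) class is labels[i] and whose CNN class is labels[j].
    """
    n = len(labels)
    result = {}
    for k, label in enumerate(labels):
        true_pos = matrix[k][k]
        predicted = sum(matrix[i][k] for i in range(n))  # column sum: all CNN predictions of this class
        reference = sum(matrix[k])                       # row sum: all reference buildings of this class
        precision = true_pos / predicted if predicted else float("nan")
        recall = true_pos / reference if reference else float("nan")
        result[label] = (precision, recall)
    return result


def overall_accuracy(matrix: List[List[int]]) -> float:
    """Fraction of buildings on the diagonal, i.e., correctly classified."""
    total = sum(sum(row) for row in matrix)
    return sum(matrix[k][k] for k in range(len(matrix))) / total


# Hypothetical counts (NOT Table 7): rows = inventory class, columns = CNN class.
labels = ["non-collapsed", "blue tarp", "collapsed"]
matrix = [[80, 10, 10],
          [5, 20, 5],
          [2, 3, 45]]
scores = precision_recall(matrix, labels)
```

With these invented counts, the collapsed class has a precision of 45/60 = 0.75 and a recall of 45/50 = 0.9, and the overall accuracy is 145/180, about 0.81. Swapping the row/column orientation swaps precision and recall, so the orientation of a published table should be checked before comparing numbers.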

The spatial distribution of the differences between the estimations and the inventories is visualized in Figure 10a,b, where the polygon colors indicate the accuracy-assessment categories defined in Table 7. For the 2016 Kumamoto earthquake (Figure 10a), correctly estimated buildings dominate the area, indicating that the building damage was accurately estimated by the proposed model. For the 1995 Kobe earthquake (Figure 10b), on the other hand, underestimated buildings were widely distributed in addition to correctly estimated ones. This is mainly because many moderately damaged buildings were classified as non-collapsed, as shown in Table 7. As indicated in Figure 2, moderate damage in the BRI-data included slight damage, such as hair-line cracks in walls. Since such slight damage is very difficult to detect in aerial images, these buildings were underestimated by the proposed model. Considering the inherent limitations of remote-sensing-based damage detection, these underestimations would be acceptable for a quick damage assessment.
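The underestimated / correctly estimated / overestimated categories used for Table 7 and Figure 10 amount to comparing two ordinal scales. A minimal sketch follows, assuming (hypothetically) that inventory grades D0–D1, D2–D4 and D5–D6 map to levels 0, 1 and 2, following the grouping in the discussion of Figure 9, and that the CNN classes non-collapsed, blue tarp and collapsed map to the same levels. The exact grouping behind Table 7, particularly for the BRI- and V-data scales, may differ.

```python
from collections import Counter

# Hypothetical ordinal levels: 0 = none/negligible, 1 = intermediate, 2 = collapse.
GRADE_LEVEL = {"D0": 0, "D1": 0, "D2": 1, "D3": 1, "D4": 1, "D5": 2, "D6": 2}
CNN_LEVEL = {"non-collapsed": 0, "blue tarp": 1, "collapsed": 2}


def assess(inventory_grade: str, cnn_class: str) -> str:
    """Classify one building as 'underestimated', 'correct' or 'overestimated'."""
    diff = CNN_LEVEL[cnn_class] - GRADE_LEVEL[inventory_grade]
    if diff < 0:
        return "underestimated"
    if diff > 0:
        return "overestimated"
    return "correct"


# Illustrative buildings, echoing the failure cases discussed for Figure 11.
pairs = [("D5", "non-collapsed"),   # soft-story collapse with an intact roof
         ("D1", "collapsed"),       # undamaged roof with solar panels
         ("D3", "blue tarp"),
         ("D0", "non-collapsed")]
tally = Counter(assess(grade, cls) for grade, cls in pairs)
```

Coloring each footprint polygon by `assess(...)` reproduces the kind of map shown in Figure 10, and the `Counter` gives the per-category totals.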

The causes of other serious misclassifications were examined by carefully checking the images and the building damage data.

Figure 11a shows examples of D5 buildings in the AIJ-data that were falsely classified as non-collapsed by the CNN model. These buildings were assigned to collapse in the inventory because soft-story collapse was confirmed in the field investigation. In the aerial images, however, no failures are visible on the roofs; only on closer inspection can rubble from columns and walls be found around the buildings. Because the roofs appear intact, soft-story collapse without roof failure is difficult to detect from vertical images, even by visual interpretation. Therefore, the damage grades of these buildings were underestimated by the CNN model. On the other hand, Figure 11b,c show examples of D0–D1 buildings falsely classified as collapsed by the CNN model. The roofs of the buildings in Figure 11b are seriously damaged. Considering the criteria of the damage grades in Table 1 and Figure 2, these buildings should have been classified as D3 or higher. This suggests that the damage was probably mislabeled in the field investigation because the damaged parts were missed.

Owing to the growth of residential solar energy generation in Japan, solar panels have been installed on the roofs of many buildings. Figure 11c shows examples of roofs with solar panels that were not seriously damaged by the earthquake and were assigned to D0 or D1 in the damage inventory. These buildings, however, were classified as collapsed by the CNN model. As shown in Figure 11c, the color and contrast of the solar panels differ markedly from those of the roof materials, so the damage was probably overestimated because the CNN model misinterpreted the panels as damage. These results show the limitations of the proposed damage-identification method, especially for buildings subjected to soft-story collapse and buildings with solar panels on their roofs. Although it would be difficult to accurately detect soft-story collapse from remote-sensing images, the overestimation caused by solar panels could be reduced by increasing the number of such training samples in future research.

5. Application to Buildings Damaged by a Typhoon

On 9 September 2019, Typhoon Faxai struck eastern Japan. Coastal areas in Chiba prefecture (see Figure 1a) were severely damaged by the strong winds of the typhoon, and the number of affected buildings in the prefecture exceeded 65,000. Kyonan town, located in the western coastal area of Chiba prefecture, was one of the areas most severely damaged by the typhoon. Figure 12 shows an aerial photograph of the town taken from a helicopter immediately after the typhoon [40]. Because the roofs were seriously damaged by the strong winds, many of them can be seen covered with blue tarps.

In earthquake disasters, buildings are damaged through the vibration of the ground, foundations and superstructures, whereas in typhoon disasters they are damaged by the unilateral force of the wind. Most building elements, including foundations, are affected by earthquakes, while typhoons mainly damage the outer parts of buildings, such as roofs and walls. Although the failure mechanism of wind-induced damage differs somewhat from that of earthquake-induced damage, the damage classification of the BRI-data shown in Table 1 has been widely used in Japan for building damage assessments in typhoon disasters as well. The proposed damage-identification method would therefore be applicable not only to earthquake disasters but also to typhoon disasters.

We applied the proposed CNN model to aerial images of Kyonan town. Figure 13a shows the aerial image acquired eighteen days after the typhoon. The target area extends 3 km in the north–south direction and 4 km in the east–west direction. Since no building damage inventory was available for this town, the locations and damage grades of the buildings were visually interpreted by the authors, and the damage grades were classified into three categories: collapsed, blue tarp-covered and non-collapsed. Figure 13a also shows the distribution of the interpreted building damage. The target area included approximately 2300 buildings in total, of which 18 and 729 were classified as collapsed and blue tarp-covered, respectively.
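As a quick check of the scale of the Kyonan dataset, the figures above imply the following area, building density and class shares. Because the building total is approximate, the derived values are approximate as well:

```latex
\begin{aligned}
A &= 3\,\text{km} \times 4\,\text{km} = 12\,\text{km}^2,
  &\qquad \frac{2300}{12\,\text{km}^2} &\approx 192\ \text{buildings/km}^2,\\[4pt]
\frac{18}{2300} &\approx 0.8\%\ \text{(collapsed)},
  &\qquad \frac{729}{2300} &\approx 31.7\%\ \text{(blue tarp-covered)},\\[4pt]
2300 - 18 - 729 &= 1553 \approx 67.5\%\ \text{(non-collapsed)}. & &
\end{aligned}
```

The classes are therefore strongly imbalanced: collapsed buildings make up less than 1% of the area, so an overall accuracy above 90% mostly reflects performance on the non-collapsed and blue tarp classes.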

Image patches were extracted from the aerial images, and the damage of the building in each patch was estimated by the proposed CNN model. Figure 13b shows the distribution of the building damage estimated by the model. The distribution of the estimated blue tarp-covered buildings agrees well with that of the visually interpreted ones. Table 8 summarizes the classification accuracies of the CNN model: more than 90% of the buildings were correctly classified. The severely damaged buildings, however, were slightly overestimated by the CNN model. The damage level was overestimated when rubble or small objects were scattered around the buildings, or when solar panels were installed on the roofs, as in Figure 11c. Although such overestimations were found for some buildings, we confirmed that the proposed CNN worked well for identifying building damage caused not only by earthquakes but also by the typhoon. The methodology introduced in this study would be useful for the rapid identification of damage distributions from post-disaster aerial images alone.

6. Conclusions

In this study, a convolutional neural network (CNN)-based methodology for identifying building damage from post-disaster aerial images was developed using the building damage inventories and aerial images obtained in the 2016 Kumamoto and 1995 Kobe earthquakes in Japan. Building damage was classified into three categories: collapsed, non-collapsed and blue tarp-covered buildings. Based on the damage classification in the inventories, collapse and non-collapse were defined as D5–D6/major damage and D0–D1/negligible damage, respectively. We confirmed that blue tarp-covered buildings predominantly represented an intermediate damage level, such as D2–D3/moderate damage.

The CNN model was developed from training and validation samples extracted from the aerial images and the damage inventories. The CNN architecture consisted of four feature-extraction stages built from convolutional, nonlinearity and pooling layers, and it also employed dropout and batch normalization. For the validation data, more than 97% of the buildings were correctly classified by the CNN model. The model was applied to all building data to estimate the damage distributions in the 2016 Kumamoto and 1995 Kobe earthquakes. Although some discrepancies in damage grades were found between the inventories and the estimations, the estimated damage distributions agreed with those in the damage inventories.
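Each of the four feature-extraction stages described above shrinks the feature map, which constrains the usable patch size. The sketch below traces the spatial side length through four convolution → nonlinearity → pooling stages using the standard output-size formulas. The input size, 3 × 3 kernels, padding and 2 × 2 pooling are hypothetical values chosen for illustration, since the paragraph does not state them. Nonlinearity, batch normalization and dropout leave the spatial size unchanged, so only convolution and pooling enter the calculation.

```python
from typing import List


def conv_output_size(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_output_size(size: int, window: int = 2, stride: int = 2) -> int:
    """Spatial output size of (max or average) pooling without padding."""
    return (size - window) // stride + 1


def feature_map_sizes(input_size: int, stages: int = 4, kernel: int = 3, padding: int = 1) -> List[int]:
    """Side length after each conv -> nonlinearity -> pool stage (hypothetical settings).

    The nonlinearity, batch normalization and dropout do not change the spatial
    size, so they are omitted from the calculation.
    """
    sizes = [input_size]
    size = input_size
    for _ in range(stages):
        size = conv_output_size(size, kernel, stride=1, padding=padding)
        size = pool_output_size(size)
        sizes.append(size)
    return sizes


same_padding = feature_map_sizes(64)             # hypothetical 64 x 64 patch, padded convs
no_padding = feature_map_sizes(64, padding=0)    # same patch, unpadded convs
```

With padded 3 × 3 convolutions, a 64-pixel patch is halved at each stage to 32, 16, 8 and finally 4 pixels; without padding it ends at 2 pixels. Sketches like this help check that a chosen patch size still leaves a meaningful feature map after four stages.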

The proposed CNN model was also applied to aerial images of Kyonan town, Chiba prefecture, which was affected by the typhoon in September 2019. The locations and damage grades of the buildings were visually interpreted. The estimated damage distribution showed good agreement with the visually interpreted one, and the accuracy assessment revealed that approximately 94% of the buildings were correctly classified by the CNN model. These results indicate that the proposed CNN model would be useful for the rapid identification of damage distributions from post-disaster aerial images.

Over- and underestimations occurred because it was difficult to distinguish small features around the buildings and solar panels on roofs from building rubble, and to identify soft-story collapse from the images. It was also difficult to accurately distinguish negligible from moderate damage, such as hair-line cracks in walls. Although these results confirm the limitations of remote-sensing-based damage detection, we intend to develop a more accurate classification technique by increasing the number of appropriate training samples, such as soft-story collapses and roofs with solar panels.

Finally, we propose a possible framework for quick and efficient damage assessment that includes remote-sensing-based damage detection. In actual post-disaster responses, the initially obtained damage information needs to be continuously updated as new information is gathered from other data sources. Our proposed method would be useful in the first phase of the damage assessment because it can provide the damage distribution building by building, more rapidly than visual interpretation. In the second phase, the estimated damage maps could be updated by visually screening the estimated building damage and additionally classifying the moderate/heavy damage levels by visual interpretation. In the third phase, the damage maps could be further updated with the detailed damage information obtained in field investigations. Such multi-phase damage assessment could provide more reliable damage maps for efficient disaster response.
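One way to realize the multi-phase updating described above is a per-building map that records which phase produced each label and lets only an equally or more reliable phase overwrite it. The sketch below is a hypothetical illustration of that idea, not part of the proposed method. The phase names, their ranking (CNN < visual screening < field investigation) and the label strings are all assumptions.

```python
from typing import Dict, Tuple

# Hypothetical reliability ranks: a higher rank means a more reliable source.
PHASE_RANK = {"cnn": 1, "visual": 2, "field": 3}

DamageMap = Dict[str, Tuple[str, str]]  # building id -> (source phase, damage label)


def update_damage_map(current: DamageMap, source: str, new_labels: Dict[str, str]) -> DamageMap:
    """Merge labels from `source` into a copy of `current`.

    A label is replaced only if the new source ranks at least as high as the one
    that produced the existing label, so a later CNN run cannot overwrite a
    field-investigation result, but can refresh an earlier CNN result.
    """
    updated = dict(current)
    rank = PHASE_RANK[source]
    for building_id, label in new_labels.items():
        previous = updated.get(building_id)
        if previous is None or PHASE_RANK[previous[0]] <= rank:
            updated[building_id] = (source, label)
    return updated


# Phase 1: CNN estimates; phase 2: visual screening; phase 3: field survey.
damage_map = update_damage_map({}, "cnn", {"b1": "collapsed", "b2": "blue tarp", "b3": "non-collapsed"})
damage_map = update_damage_map(damage_map, "visual", {"b2": "heavy"})
damage_map = update_damage_map(damage_map, "field", {"b1": "D5"})
damage_map = update_damage_map(damage_map, "cnn", {"b1": "non-collapsed"})  # ignored for b1
```

After these updates, building `b1` keeps its field label, `b2` keeps the visually screened label and `b3` still carries the CNN estimate. Using `<=` rather than `<` lets a phase refresh its own earlier results, for instance when new aerial images arrive.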