Landslide Susceptibility Modeling: An Integrated Novel Method Based on Machine Learning Feature Transformation

Abstract

:1. Introduction

2. Study Area and Dataset

2.1. Study Area

2.2. Dataset

2.2.1. Landslide Inventory

2.2.2. Landslide Conditioning Factors

3. Methods

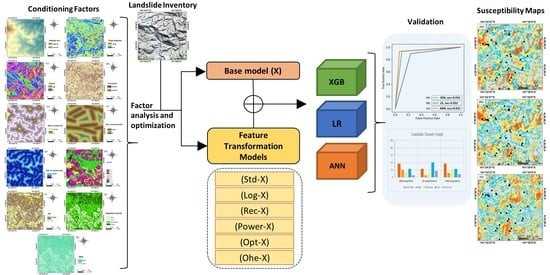

3.1. Overall Workflow

3.2. Feature Transformations

3.3. Modeling Methods

3.3.1. Extreme Gradient Boosting (XGB)

3.3.2. Logistic Regression (LR)

3.3.3. Artificial Neural Networks (ANN)

3.4. Evaluation Metrics

3.4.1. The 10-Fold Cross-Validation

3.4.2. Receiver Operating Characteristics (ROC)

4. Results

4.1. Descriptive Statistics of the Data

4.2. Impact of the Feature Transformations Applied to the Benchmark Models

4.2.1. Impact of the Feature Transformations on XGB

4.2.2. Impact of the Feature Transformations on LR

4.2.3. Impact of the Feature Transformations on ANN

4.3. Landslide Susceptibility Maps

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Kavzoglu, T.; Colkesen, I.; Sahin, E.K. Machine learning techniques in landslide susceptibility mapping: A survey and a case study. Landslides Theory Pract. Model. 2018, 50, 283–301. [Google Scholar] [CrossRef]

- Pradhan, B.; Al-Najjar, H.A.H.; Sameen, M.I.; Mezaal, M.R.; Alamri, A.M. Landslide detection using a saliency feature enhancement technique from LIDAR-derived DEM and orthophotos. IEEE Access 2020, 8, 121942–121954. [Google Scholar] [CrossRef]

- Sameen, M.I.; Pradhan, B.; Bui, D.T.; Alamri, A.M. Systematic sample subdividing strategy for training landslide susceptibility models. CATENA 2019, 187, 104358. [Google Scholar] [CrossRef]

- Arabameri, A.; Pal, S.C.; Rezaie, F.; Chakrabortty, R.; Saha, A.; Blaschke, T.; Di Napoli, M.; Ghorbanzadeh, O.; Ngo, P.T.T. Decision tree based ensemble machine learning approaches for landslide susceptibility mapping. Geocarto Int. 2021, 2021, 1–35. [Google Scholar] [CrossRef]

- Napoli, M.D.; Martire, D.D.; Bausilio, G.; Calcaterra, D.; Confuorto, P.; Firpo, M.; Pepe, G.; Cevasco, A. Rainfall-induced shallow land-slide detachment, transit and runout susceptibility mapping by integrating machine learning techniques and GIS-based approaches. Water 2021, 13, 488. [Google Scholar] [CrossRef]

- Novellino, A.; Cesarano, M.; Cappelletti, P.; Di Martire, D.; Di Napoli, M.; Ramondini, M.; Sowter, A.; Calcaterra, D. Slow-moving landslide risk assessment combining Machine Learning and InSAR techniques. CATENA 2021, 203, 105317. [Google Scholar] [CrossRef]

- Pradhan, B.; Seeni, M.I.; Kalantar, B. Performance Evaluation and Sensitivity Analysis of Expert-Based, Statistical, Machine Learning, and Hybrid Models for Producing Landslide Susceptibility Maps. In Laser Scanning Applications in Landslide Assessment; Pradhan, B., Ed.; Springer: Cham, Switzerland, 2017. [Google Scholar] [CrossRef]

- Géron, A. Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow: Concepts, Tools, and Techniques to Build. Intelligent Systems, 2nd ed.; O’Reilly Media: Sebastopol, CA, USA, 2019. [Google Scholar]

- Brownlee, J. Master Machine Learning Algorithms: Discover How They Work and Implemen Them from Scratch; Machine Learning Mastery: Vermont, Australia, 2016. [Google Scholar]

- Box, G.E.P.; Cox, D.R. An analysis of transformations. J. R. Stat. Soc. Ser. B 1964, 26, 211–243. [Google Scholar] [CrossRef]

- Bengio, Y.; Courville, A.; Vincent, P. Representation learning: A review and new perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1798–1828. [Google Scholar] [CrossRef]

- Coates, A.; Ng, A.; Lee, H. An analysis of single-layer networks in unsupervised feature learning. In Proceedings of the 14th International Conference on Artificial Intelligence and Statistics, Ft. Lauderdale, FL, USA, 11–13 April 2011; pp. 215–223. [Google Scholar]

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Jolliffe, I.T. Principal component analysis for special types of data. Prin. Compon. Anal. 2002, 2002, 338–372. [Google Scholar]

- Olshausen, B.A.; Field, D.J. Emergence of simple-cell receptive field properties by learning a sparse code for natural images. Nature 1996, 381, 607–609. [Google Scholar] [CrossRef] [PubMed]

- Pechenizkiy, M.; Tsymbal, A.; Puuronen, S. PCA-based feature transformation for classification: Issues in medical diagnostics. In Proceedings of the 17th IEEE Symposium on Computer-Based Medical Systems, Bethesda, MD, USA, 25 June 2004. [Google Scholar] [CrossRef] [Green Version]

- Haixiang, G.; Yijing, L.; Shang, J.; Mingyun, G.; Yuanyue, H.; Bing, G. Learning from class-imbalanced data: Review of methods and applications. Expert Syst. Appl. 2017, 73, 220–239. [Google Scholar] [CrossRef]

- Kim, K.-J.; Lee, W.B. Stock market prediction using artificial neural networks with optimal feature transformation. Neural Comput. Appl. 2004, 13, 255–260. [Google Scholar] [CrossRef]

- Abe, M.; Aoki, K.; Ateniese, G.; Avanzi, R.; Beerliová, Z.; Billet, O. Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); 3960 LNCS:VI; Springer: Berlin/Heidelberg, Germany, 2006. [Google Scholar]

- García, V.; Sánchez, J.; Mollineda, R. On the effectiveness of preprocessing methods when dealing with different levels of class imbalance. Knowl.-Based Syst. 2012, 25, 13–21. [Google Scholar] [CrossRef]

- Hussin, H.Y.; Zumpano, V.; Reichenbach, P.; Sterlacchini, S.; Micu, M.; van Westen, C.; Bălteanu, D. Different landslide sampling strategies in a grid-based bi-variate statistical susceptibility model. Geomorphology 2016, 253, 508–523. [Google Scholar] [CrossRef]

- Mezaal, M.R.; Pradhan, B.; Sameen, M.I.; Shafri, H.Z.M.; Yusoff, Z.M. Optimized neural architecture for automatic landslide detection from high-resolution airborne laser scanning data. Appl. Sci. 2017, 7, 730. [Google Scholar] [CrossRef] [Green Version]

- Merghadi, A.; Yunus, A.P.; Dou, J.; Whiteley, J.; ThaiPham, B.; Bui, D.T.; Avtar, R.; Abderrahmane, B. Machine learning methods for landslide susceptibility studies: A comparative overview of algorithm performance. Earth-Sci. Rev. 2020, 207, 103225. [Google Scholar] [CrossRef]

- Steger, S.; Brenning, A.; Bell, R.; Glade, T. Incompleteness matters-An approach to counteract inventory-based biases in statistical landslide susceptibility modelling. In EGU General Assembly Conference Abstracts; EGU: Vienna, Austria, 2018; p. 8551. [Google Scholar]

- Canoglu, M.C.; Aksoy, H.; Ercanoglu, M. Integrated approach for determining spatio-temporal variations in the hydrodynamic factors as a contributing parameter in landslide susceptibility assessments. Bull. Int. Assoc. Eng. Geol. 2018, 78, 3159–3174. [Google Scholar] [CrossRef]

- Samia, J.; Temme, A.; Bregt, A.K.; Wallinga, J.; Stuiver, J.; Guzzetti, F.; Ardizzone, F.; Rossi, M. Implementing landslide path dependency in landslide susceptibility modelling. Landslides 2018, 15, 2129–2144. [Google Scholar] [CrossRef] [Green Version]

- Hussin, H.; Zumpano, V.; Sterlacchini, S.; Reichenbach, P.; Bãlteanu, D.; Micu, M.; Bordogna, G.; Cugini, M. Comparing the predic-tive capability of landslide susceptibility models in three different study areas using the weights of evidence technique. In EGU General Assembly Conference Abstracts; EGU2013-12701; EGU: Vienna, Austria, 2013. [Google Scholar]

- Arnone, E.; Francipane, A.; Scarbaci, A.; Puglisi, C.; Noto, L. Effect of raster resolution and polygon-conversion algorithm on landslide susceptibility mapping. Environ. Model. Softw. 2016, 84, 467–481. [Google Scholar] [CrossRef]

- Mezaal, M.R.; Pradhan, B.; Rizeei, H.M. Improving landslide detection from airborne laser scanning data using optimized dempster–shafer. Remote. Sens. 2018, 10, 1029. [Google Scholar] [CrossRef] [Green Version]

- Pradhan, B. Laser Scanning Applications in Landslide Assessment; Springer: Berlin/Heidelberg, Germany, 2017. [Google Scholar] [CrossRef]

- Soma, A.S.; Kubota, T.; Mizuno, H. Optimization of causative factors using logistic regression and artificial neural network models for landslide susceptibility assessment in Ujung Loe Watershed, South Sulawesi Indonesia. J. Mt. Sci. 2019, 16, 383–401. [Google Scholar] [CrossRef]

- Feizizadeh, B.; Roodposhti, M.S.; Blaschke, T.; Aryal, J. Comparing GIS-based support vector machine kernel functions for landslide susceptibility mapping. Arab. J. Geosci. 2017, 10, 122. [Google Scholar] [CrossRef]

- Pradhan, B. A comparative study on the predictive ability of the decision tree, support vector machine and neuro-fuzzy models in landslide susceptibility mapping using GIS. Comput. Geosci. 2013, 51, 350–365. [Google Scholar] [CrossRef]

- Roy, A.C.; Islam, M. Predicting the Probability of Landslide using Artificial Neural Network. In Proceedings of the 5th International Conference on Advances in Electrical Engineering (ICAEE), Dhaka, Bangladesh, 26–28 September 2019; pp. 874–879. [Google Scholar] [CrossRef]

- Zhang, Y.; Ge, T.; Tian, W.; Liou, Y.A. Debris flow susceptibility mapping using machine-learning techniques in Shigatse area, China. Remote. Sens. 2019, 11, 2801. [Google Scholar] [CrossRef] [Green Version]

- Yousefi, S.; Pourghasemi, H.R.; Emami, S.N.; Pouyan, S.; Eskandari, S.; Tiefenbacher, J.P. A machine learning framework for multi-hazards modeling and mapping in a mountainous area. Sci. Rep. 2020, 10, 12144. [Google Scholar] [CrossRef] [PubMed]

- Nhu, V.-H.; Shirzadi, A.; Shahabi, H.; Singh, S.K.; Al-Ansari, N.; Clague, J.J.; Jaafari, A.; Chen, W.; Miraki, S.; Dou, J.; et al. Shallow landslide susceptibility mapping: A comparison between logistic model tree, logistic regression, naïve bayes tree, artificial neural network, and support vector machine algorithms. Int. J. Environ. Res. Public Health 2020, 17, 2749. [Google Scholar] [CrossRef] [PubMed]

- Schlögel, R.; Marchesini, I.; Alvioli, M.; Reichenbach, P.; Rossi, M.; Malet, J.-P. Optimizing landslide susceptibility zonation: Effects of DEM spatial resolution and slope unit delineation on logistic regression models. Geomorphology 2017, 301, 10–20. [Google Scholar] [CrossRef]

- Conoscenti, C.; Rotigliano, E.; Cama, M.; Arias, N.A.C.; Lombardo, L.; Agnesi, V. Exploring the effect of absence selection on landslide susceptibility models: A case study in Sicily, Italy. Geomorphology 2016, 261, 222–235. [Google Scholar] [CrossRef]

- Tsangaratos, P.; Ilia, I. Comparison of a logistic regression and Naïve Bayes classifier in landslide susceptibility assessments: The influence of models complexity and training dataset size. CATENA 2016, 145, 164–179. [Google Scholar] [CrossRef]

- Pradhan, B.; Lee, S. Landslide susceptibility assessment and factor effect analysis: Backpropagation artificial neural networks and their comparison with frequency ratio and bivariate logistic regression modelling. Environ. Model. Softw. 2010, 25, 747–759. [Google Scholar] [CrossRef]

- Pradhan, B.; Lee, S. Regional landslide susceptibility analysis using back-propagation neural network model at Cameron Highland, Malaysia. Landslides 2009, 7, 13–30. [Google Scholar] [CrossRef]

- Evans, J.S.; Hudak, A.T. A multiscale curvature algorithm for classifying discrete return LiDAR in forested environments. IEEE Trans. Geosci. Remote. Sens. 2007, 45, 1029–1038. [Google Scholar] [CrossRef]

- Jebur, M.N.; Pradhan, B.; Tehrany, M.S. Optimization of landslide conditioning factors using very high-resolution airborne laser scanning (LiDAR) data at catchment scale. Remote. Sens. Environ. 2014, 152, 150–165. [Google Scholar] [CrossRef]

- Al-Najjar, H.A.H.; Kalantar, B.; Pradhan, B.; Saeidi, V. Conditioning factor determination for mapping and prediction of landslide susceptibility using machine learning algorithms. In Proceedings of the Proceedings Volume 11156, Earth Resources and Environmental Remote Sensing/GIS Applications X, Strasbourg, France, 10–12 September 2019; p. 111560. [Google Scholar] [CrossRef]

- Wilson, J.P.; Gallant, J.C. Terrain Analysis: Principles and Applications; John Wiley & Sons: Hoboken, NJ, USA, 2000. [Google Scholar]

- Lee, S.; Ryu, J.-H.; Min, K.; Won, J.-S. Landslide susceptibility analysis using GIS and artificial neural network. Earth Surf. Process. Landforms 2003, 28, 1361–1376. [Google Scholar] [CrossRef]

- Zheng, A.; Casari, A. Feature Engineering for Machine Learning: Principles and Techniques for Data Scientists; O’Reilly Media, Inc.: Newton, CA, USA, 2018. [Google Scholar]

- Heaton, J. An empirical analysis of feature engineering for predictive modeling. In Proceedings of the SoutheastCon 2016, Norfolk, VA, USA, 30 March–3 April 2016; pp. 1–6. [Google Scholar] [CrossRef] [Green Version]

- Auer, T. Pre-Processing Data. 2019. Available online: https://scikit-learn.org/stable/modules/preprocessing.html#standardization-or-mean-removal-and-variance-scaling (accessed on 1 March 2021).

- Ray, S. A Comprehensive Guide to Data Exploration. 2016. Available online: https://www.analyticsvidhya.com/blog/2016/01/guide-data-exploration/ (accessed on 1 March 2021).

- sklearn.preprocessing.MinMaxScaler. 2020. Available online: https://scikit-learn.org/stable/modules/generated/sklearn.preprocessing.MinMaxScaler.html (accessed on 1 March 2021).

- Sarkar, D. Continuous Numeric Data. 2018. Available online: https://towardsdatascience.com/understanding-feature-engineering-part-1-continuous-numeric-data-da4e47099a7b (accessed on 15 December 2020).

- Calculus, F. Reciprocal Function. 2011. Available online: https://calculus.subwiki.org/wiki/Reciprocal_function (accessed on 10 April 2021).

- Power Functions. 2020. Available online: https://www.brightstorm.com/math/precalculus/polynomial-and-rational-functions/power-functions/ (accessed on 10 April 2021).

- Ronaghan, S. The Mathematics of Decision Trees, Random Forest and Feature Importance in Scikit-Learn and Spark. Towards Data Science. 2018. Available online: https://towardsdatascience.com/the-mathematics-of-decision-trees-random-forest-and-feature-importance-in-scikit-learn-and-spark-f2861df67e3 (accessed on 1 March 2021).

- Micheletti, N.; Foresti, L.; Robert, S.; Leuenberger, M.; Pedrazzini, A.; Jaboyedoff, M.; Kanevski, M. Machine learning feature selection methods for landslide susceptibility mapping. Math. Geosci. 2014, 46, 33–57. [Google Scholar] [CrossRef] [Green Version]

- Brownlee, J. Why One-Hot Encode Data in Machine Learning. Mach. Learn. Mastery 2017. Available online: https://machinelearningmastery.com/why-one-hot-encode-data-in-machine-learning/ (accessed on 1 March 2021).

- Chen, T.; Guestrin, C. Xgboost: A scalable tree boosting system. In Proceedings of the 22nd Acm Sigkdd International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 13–17 August 2016; pp. 785–794. [Google Scholar] [CrossRef] [Green Version]

- Dewancker, I.; McCourt, M.; Clark, S. Bayesian optimization for machine learning: A Practical Guidebook. arXiv 2016, arXiv:1612.04858. [Google Scholar]

- Sonobe, R.; Yamaya, Y.; Tani, H.; Wang, X.; Kobayashi, N.; Mochizuki, K.-I. Assessing the suitability of data from Sentinel-1A and 2A for crop classification. GIScience Remote. Sens. 2017, 54, 918–938. [Google Scholar] [CrossRef]

- Georganos, S.; Grippa, T.; VanHuysse, S.; Lennert, M.; Shimoni, M.; Kalogirou, S.; Wolff, E. Less is more: Optimizing classification performance through feature selection in a very-high-resolution remote sensing object-based urban application. GIScience Remote. Sens. 2017, 55, 221–242. [Google Scholar] [CrossRef]

- Liu, T.; Abd-Elrahman, A.; Morton, J.; Wilhelm, V.L. Comparing fully convolutional networks, random forest, support vector machine, and patch-based deep convolutional neural networks for object-based wetland mapping using images from small unmanned aircraft system. GIScience Remote. Sens. 2018, 55, 243–264. [Google Scholar] [CrossRef]

- Santos, L.D. GPU Accelerated Classifier Benchmarking for Wildfire Related Tasks. Ph.D. Thesis, NOVA University of Lisbon, Lisbon, Portugal, 2018. [Google Scholar]

- Mondini, A.C.; Chang, K.T.; Chiang, S.H.; Schlögel, R.; Notarnicola, C.; Saito, H. Automatic mapping of event landslides at basin scale in Taiwan using a Montecarlo approach and synthetic land cover fingerprints. Int. J. Appl. Earth Obs. Geoinf. 2017, 63, 112–121. [Google Scholar] [CrossRef]

- Zhou, C.; Yin, K.; Cao, Y.; Ahmed, B.; Li, Y.; Catani, F.; Pourghasemi, H.R. Landslide susceptibility modeling applying machine learning methods: A case study from Longju in the Three Gorges Reservoir area, China. Comput. Geosci. 2018, 112, 23–37. [Google Scholar] [CrossRef] [Green Version]

- Sharma, N.; Chakrabarti, A.; Balas, V.E. Data management, analytics and innovation. In Proceedings of the ICDMAI, Macao, China, 8–11 April 2019. [Google Scholar] [CrossRef]

- Lee, S.; Pradhan, B. Landslide hazard mapping at Selangor, Malaysia using frequency ratio and logistic regression models. Landslides 2006, 4, 33–41. [Google Scholar] [CrossRef]

- Berrar, D. Cross-validation. J. Math. Psychol. 2019, 1, 542–545. [Google Scholar] [CrossRef]

- Kalantar, B.; Pradhan, B.; Naghibi, S.A.; Motevalli, A.; Mansor, S. Assessment of the effects of training data selection on the land-slide susceptibility mapping: A comparison between support vector machine (SVM), logistic regression (LR) and artificial neural networks (ANN). Geomat. Nat. Hazards Risk 2018, 9, 49–69. [Google Scholar] [CrossRef]

- Huang, Y.; Zhao, L. Review on landslide susceptibility mapping using support vector machines. CATENA 2018, 165, 520–529. [Google Scholar] [CrossRef]

- Pham, B.T.; Bui, D.T.; Prakash, I.; Dholakia, M. Hybrid integration of multilayer perceptron neural networks and machine learning ensembles for landslide susceptibility assessment at Himalayan area (India) using GIS. CATENA 2017, 149, 52–63. [Google Scholar] [CrossRef]

- Wang, L.J.; Guo, M.; Sawada, K.; Lin, J.; Zhang, J. A comparative study of landslide susceptibility maps using logistic regression, frequency ratio, decision tree, weights of evidence and artificial neural network. Geosci. J. 2016, 20, 117–136. [Google Scholar] [CrossRef]

| Transformation Approach | Function | Description |

|---|---|---|

| Minimax normalization (Std-X) | When , y is equal to , whereas when , the value of is equal to . This implies that the total range of varied in a range from 0 to 1 [52]. | |

| Logarithmic functions (Log-X) | Logarithmic functions are the inverses of exponential functions. The reverse of the exponential function is [53]. | |

| Reciprocal function (Rec-X) | The reciprocal function is a function characterized by the set of nonzero real numbers, which sends every real number to its reciprocal value [54]. | |

| Power functions (Power-X) | All power functions pass through the point (1,1) on the coordinate plane. It is a function where, so that n is any real constant number [55]. | |

| Optimal features selected by random forest (Opt-X) | Random forests are comprised of from 4 to 12 hundred choices of trees. Each tree has an additional order of Yes/No inquiries dependent on a singular or mixture of features. The importance of each feature is extracted from how “pure” every lot is [56,57]. | |

| One-hot encoding (Ohe-X) applied to land use and vegetation density | Land use and vegetation density encoding | For categorical variables, no ordinal relationship exists, and the integer encoding is not sufficient. One-hot encoding is applied to the integer representations. This is the place the integer encoded variable is unconcerned and another paired variable is added for every unique integer number [58]. |

| Item | Slope | Curvature | Aspect | Distance to Lineament | Distance to Road | Distance to Stream | Altitude | TRI |

|---|---|---|---|---|---|---|---|---|

| mean | 32.16 | −1.05 | 180.18 | 108.43 | 74.19 | 37.92 | 1581.65 | 49.53 |

| std | 14.96 | 19.61 | 107.93 | 75.37 | 80.28 | 27.73 | 76.86 | 14.64 |

| min | 3.08 | −83.54 | 4.31 | 1.41 | 2.24 | 0.00 | 1466.28 | 17.17 |

| 25% | 22.59 | −12.28 | 87.72 | 51.24 | 17.56 | 13.97 | 1520.74 | 42.97 |

| 50% | 34.89 | −0.88 | 175.74 | 89.22 | 48.52 | 31.96 | 1574.02 | 53.12 |

| 75% | 43.14 | 6.97 | 281.97 | 150.93 | 100.16 | 56.19 | 1620.19 | 58.46 |

| max | 61.14 | 47.95 | 359.57 | 298.65 | 343.42 | 107.01 | 1795.60 | 88.25 |

| Model | Accuracies | Percentage of Improvement from the Base Model | ||||

|---|---|---|---|---|---|---|

| XGB | LR | ANN | XGB | LR | ANN | |

| X | 87.546 | 83.434 | 52.244 | - | - | - |

| Std-X | 87.546 | 88.760 | 86.807 | 0.000 | 5.326 | 34.563 |

| Log-X | 81.880 | 82.125 | 86.974 | −5.666 | −1.309 | 34.730 |

| Rec-X | 81.282 | 77.365 | 82.888 | −6.264 | −6.069 | 30.644 |

| Power-X | 87.546 | 89.983 | 85.708 | 0.000 | 6.549 | 33.464 |

| Opt-X | 75.427 | 86.411 | 86.101 | −12.119 | 2.977 | 33.857 |

| Ohe-X | 86.544 | 89.960 | 89.398 | −1.002 | 6.526 | 37.154 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Al-Najjar, H.A.H.; Pradhan, B.; Kalantar, B.; Sameen, M.I.; Santosh, M.; Alamri, A. Landslide Susceptibility Modeling: An Integrated Novel Method Based on Machine Learning Feature Transformation. Remote Sens. 2021, 13, 3281. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13163281

Al-Najjar HAH, Pradhan B, Kalantar B, Sameen MI, Santosh M, Alamri A. Landslide Susceptibility Modeling: An Integrated Novel Method Based on Machine Learning Feature Transformation. Remote Sensing. 2021; 13(16):3281. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13163281

Chicago/Turabian StyleAl-Najjar, Husam A. H., Biswajeet Pradhan, Bahareh Kalantar, Maher Ibrahim Sameen, M. Santosh, and Abdullah Alamri. 2021. "Landslide Susceptibility Modeling: An Integrated Novel Method Based on Machine Learning Feature Transformation" Remote Sensing 13, no. 16: 3281. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13163281