Geographic Object-Based Image Analysis: A Primer and Future Directions

Abstract

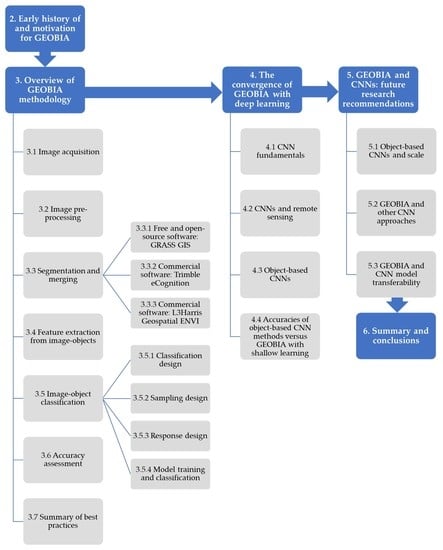

1. Introduction

2. Early History of and Motivation for GEOBIA

3. Overview of GEOBIA Methodology

3.1. H-Resolution Image Acquisition

3.2. Image and Ancillary Data Pre-Processing

3.3. Classification Design

3.4. Segmentation and Merging

3.4.1. Free and Open-Source Software: GRASS GIS

3.4.2. Commercial Software: Trimble eCognition

3.4.3. Commercial Software: L3Harris Geospatial ENVI FX

3.5. Feature Extraction and Feature Space Reduction

3.6. Image-Object Classification

3.6.1. Sampling Design

3.6.2. Response Design

3.6.3. Model Training and Classification

3.7. Accuracy Assessment

3.8. Summary of Best Practices

4. The Convergence of GEOBIA with Convolutional Neural Networks

4.1. CNN Fundamentals

4.2. CNNs and Remote Sensing

4.3. Per-Pixel Classification with CNNs

4.4. Geographic Object-Based CNNs (GEOCNNs)

- Approach 1 includes: (i) image segmentation; (ii) CNN training patch extraction; (iii) CNN model training; (iv) CNN model inference to output a classification map; (v) superimposition of the segment boundaries on the classification map; and (vi) segment classification based on the majority class (Figure 5) [100,102,109,110,111,112,113,114]. Some studies used random sampling to generate training patch locations (e.g., [102,112]), whereas other studies used image segments as guides, with each training patch enclosing or containing part of an image segment (e.g., [109,110,114]). Approach 1 has been used with patch-based CNNs as well as FCNs.

- Approach 2 is another popular methodology that includes: (i) image segmentation; (ii) extraction of CNN training patches that enclose or are within training segments; (iii) CNN model training; (iv) CNN model inference on patches that enclose or are within segments; and (v) segment classification based on the class of the corresponding patch (Figure 5) [92,101,103,104,115]. This approach has been demonstrated with patch-based CNNs, and one major motivation is to reduce computational demand by replacing the sliding window approach of patch-based CNNs with fewer classifications (as few as one) per segment [103]. However, there are challenges with Approach 2. First, by relying on fewer classified pixels to determine the class of each image segment, Approach 2 is less robust and has a lower fault tolerance than the per-pixel inference and use of the majority class in Approach 1 [112]. Second, training and inference patches are extracted based on segment geometrical properties such as their center [92,103,104]. For irregularly shaped segments, such as those with curved boundaries, the central pixel of the training and inference patch may be located outside of the segment’s boundary and within a different class, which would negatively impact training and inference [92].

- Approach 3 is less common and includes: (i) image segmentation; (ii) CNN model training that incorporates object-based information; and (iii) CNN model inference to output a classification map (Figure 5) [98,105,116]. Jozdani et al. [98] incorporated object-based information by enclosing image segments with training patches. Papadomanolaki et al. [105] incorporated an object-based loss term in the training of their GEOCNN. During training, the typical classification loss was calculated in the forward pass (based on whether the predicted class of each pixel matched the corresponding reference class). An additional object-based loss was also calculated, based on whether the predicted class of each pixel matched the majority predicted class of the segment that contained the pixel [105]. We note, however, that Papadomanolaki et al. [105] used a superpixel (over-)segmentation algorithm instead of an object-based segmentation algorithm (e.g., eCognition’s Multiresolution Segmentation), so it is unclear whether this example can be considered object-based. Poomani et al. [116] extracted conventional GEOBIA features (texture, edge, and shape) from image segments. Their custom CNN model, which used an SVM instead of a softmax classifier, was trained using CNN-derived and human-engineered features [116].

- Approach 4 is less commonly used and includes: (i) CNN model training; (ii) CNN model inference to output a classification map; and (iii) classification map segmentation and refinement (Figure 5) [117]. Timilsina et al. [117] performed object-based binary classification with a CNN, where a CNN model was trained and used for inference to produce a classification probability map. The probability map was segmented using the multiresolution segmentation algorithm. The probability map, a canopy height model, and a normalized difference vegetation index image were superimposed to classify the segments as tree canopy or non-tree canopy based on manually defined thresholds.
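
The final two steps of Approach 1, superimposing segment boundaries on the per-pixel classification map and assigning each segment its majority class, can be sketched in a few lines of Python. This is a minimal illustration and not the implementation used in any of the cited studies; the function names (`majority_class_per_segment`, `relabel_by_segment`), the nested-list rasters, and the toy class labels are assumptions made for the example.

```python
from collections import Counter, defaultdict


def majority_class_per_segment(segment_ids, class_map):
    """Return {segment_id: majority class} from two same-shaped 2-D grids."""
    votes = defaultdict(Counter)
    for seg_row, cls_row in zip(segment_ids, class_map):
        for seg_id, cls in zip(seg_row, cls_row):
            votes[seg_id][cls] += 1
    # most_common(1) yields the class with the highest pixel count;
    # ties go to the class encountered first in scan order.
    return {seg_id: counts.most_common(1)[0][0]
            for seg_id, counts in votes.items()}


def relabel_by_segment(segment_ids, class_map):
    """Replace each pixel's class with the majority class of its segment."""
    majority = majority_class_per_segment(segment_ids, class_map)
    return [[majority[seg_id] for seg_id in row] for row in segment_ids]


# Hypothetical 3x4 scene: two segments and a noisy per-pixel CNN output.
segments = [
    [1, 1, 2, 2],
    [1, 1, 2, 2],
    [1, 1, 2, 2],
]
cnn_classes = [
    ["tree", "tree", "road", "road"],
    ["tree", "road", "road", "tree"],
    ["tree", "tree", "road", "road"],
]
refined = relabel_by_segment(segments, cnn_classes)
```

In this toy case the single mislabeled pixel in each segment is outvoted by its neighbors, which is the kind of fault tolerance attributed to Approach 1 in the text above.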

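The patch-placement problem noted for Approach 2 can be illustrated with a small check on whether a segment's centroid actually falls inside the segment. The sketch below is hypothetical: the helper names (`segment_pixels`, `centroid`, `patch_center`) and the fallback of snapping to the nearest segment pixel are assumptions made for illustration, not a remedy proposed in the cited studies.

```python
def segment_pixels(segment_ids, target_id):
    """List the (row, col) coordinates of all pixels in one segment."""
    return [(r, c)
            for r, row in enumerate(segment_ids)
            for c, seg_id in enumerate(row)
            if seg_id == target_id]


def centroid(pixels):
    """Mean row and mean column of a list of (row, col) pixels."""
    n = len(pixels)
    return (sum(r for r, _ in pixels) / n, sum(c for _, c in pixels) / n)


def patch_center(pixels):
    """Return (center_pixel, centroid_inside_segment).

    If the rounded centroid lies outside the segment, fall back to the
    segment pixel closest to the centroid (an assumed workaround).
    """
    cr, cc = centroid(pixels)
    candidate = (round(cr), round(cc))
    if candidate in set(pixels):
        return candidate, True
    nearest = min(pixels, key=lambda p: (p[0] - cr) ** 2 + (p[1] - cc) ** 2)
    return nearest, False


# Hypothetical scene: segment 1 is C-shaped and wraps around segment 2.
segments = [
    [1, 1, 1],
    [1, 2, 2],
    [1, 1, 1],
]
center_1, inside_1 = patch_center(segment_pixels(segments, 1))
center_2, inside_2 = patch_center(segment_pixels(segments, 2))
```

For the C-shaped segment, the rounded centroid lands on a pixel of segment 2, so a patch centered there would be labeled with the wrong class unless the center is moved.
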
4.5. Accuracies of GEOCNN Methods Versus Conventional GEOBIA

5. GEOBIA and CNNs: Future Research Recommendations

5.1. GEOCNNs and Scale

5.2. GEOBIA and Other CNN Approaches

5.3. GEOBIA and CNN Model Transferability

6. Summary

- GEOBIA has been an active research field since the early 2000s, with over 600 publications since 2000 [2]. GEOBIA defines and examines image-objects: groups of neighboring pixels that represent real-world geographic objects. GEOBIA is a multiscale image analysis framework in which geographic objects of multiple scales are spatially modeled (as image-objects) based on their internal characteristics and their relationships with other objects.

- The advantages of GEOBIA over pixel-based classification include: (i) the partitioning of images into image-objects mimics human visual interpretation; (ii) analyzing image-objects provides additional information (e.g., texture, geometry, and contextual relations); (iii) image-objects can more easily be integrated into a geographic information system (GIS); and (iv) using image-objects as the basic units of analysis helps mitigate the modifiable areal unit problem in remote sensing [1].

- Many free and open-source as well as commercial GEOBIA software options exist. Three options discussed in this paper are: (1) a Python-programmed GEOBIA processing chain based on the free and open-source GRASS GIS software [29]; (2) eCognition, commercial software by Trimble [43]; and (3) ENVI FX, commercial software by L3Harris Geospatial [44].

- Steps in the GEOBIA methodology generally include: (1) H-resolution image acquisition; (2) image and ancillary data pre-processing; (3) classification design; (4) segmentation and merging; (5) feature extraction and feature space reduction; (6) image-object classification; and (7) accuracy assessment. Active research areas in GEOBIA methodology include improving and standardizing steps 4–7. Table 2 summarizes the requirements and best practice recommendations regarding each step.

- There is a research gap regarding how the software-integrated segmentation optimization methods compare to each other and to newly emerging object-scale approaches (e.g., [53]).

- Image-object classification contains numerous methodological aspects, some of which are not yet standardized. For example, there is no consensus as to which sampling units (i.e., pixels or polygons) should be used to represent test samples, though recent research suggests that polygons are becoming the standard unit [6]. Furthermore, although 50 is the minimum recommended per-class test sample size, it is unclear whether this number pertains to pixels or polygons [6].

- We recommend the list-frame approach in conjunction with random sampling for choosing training and test samples, which avoids sampling bias due to image-object size [3,6,70]. However, this approach is not explicitly programmed into the three discussed software options and needs to be implemented by the user.

- The standard approach for accuracy assessment is to evaluate thematic accuracy, though the research literature has increasingly evaluated segmentation accuracy [6]. However, currently there is no standard approach for matching reference polygons with GEOBIA polygons, and there is no consensus as to which segmentation accuracy metrics should be used [6].

- Based on recent literature, we anticipate that a major future GEOBIA research direction will explore the integration of GEOBIA and deep learning, i.e., geographic object-based convolutional neural networks (GEOCNNs). We described four general GEOCNN approaches and representative literature [90,98,100,101,102,103,104,105,109,110,111,112,113,114,115,116,117].

- We encourage future research to focus on demonstrating and evaluating different (multiscale) GEOCNN approaches and comparing them to (i) conventional GEOBIA, (ii) per-pixel classification using patch-based CNNs, (iii) fully convolutional networks (FCNs), and (iv) instance segmentation methods (e.g., Mask R-CNN). These comparisons will ideally consider thematic accuracy as well as segmentation accuracy.

- Compared to conventional GEOBIA, CNNs require substantially larger training datasets (i.e., thousands of samples). One common strategy to meet this requirement is transfer learning, where a pre-trained model is adapted to new training data for a new classification task. Furthermore, massive Earth-observation-based training data releases (such as Microsoft’s recent releases of millions of building footprints [130,131,132]) may help advance the application of CNNs in the remote sensing domain. Training data must be as diverse as possible.

- Finally, the “as is” transferability (with respect to geography, image source, mapping objective, etc.) of pre-trained conventional GEOBIA, CNN, and GEOCNN models should be compared and further researched.

- Geographic object-based approaches integrating conventional GEOBIA and CNNs (i.e., GEOCNNs) are emerging as a new form of GEOBIA.

- Continued research on these topics may further guide GEOBIA innovations and widespread utility.
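
The list-frame recommendation above can be sketched as follows: image-objects are drawn at random from a list of object identifiers, so every object has the same selection probability regardless of its area. This is a minimal sketch under stated assumptions, since none of the three discussed software options provides it directly; the function names, the object identifiers, and the areas in the example are hypothetical.

```python
import random


def list_frame_sample(object_ids, n_samples, seed=None):
    """Simple random sample of image-objects from a list frame.

    Each object is one entry in the list, so large and small objects
    are equally likely to be selected.
    """
    rng = random.Random(seed)
    frame = sorted(object_ids)  # fixed order makes seeded runs repeatable
    return rng.sample(frame, n_samples)


def split_train_test(object_ids, n_train, n_test, seed=None):
    """Draw disjoint training and test sets from the same list frame."""
    picked = list_frame_sample(object_ids, n_train + n_test, seed)
    return picked[:n_train], picked[n_train:]


# Hypothetical image-objects: identifier -> area in pixels.
# The areas are never consulted, which is the point of the list frame.
objects = {101: 12, 102: 950, 103: 40, 104: 7, 105: 3200,
           106: 64, 107: 18, 108: 410, 109: 5, 110: 77}
train_ids, test_ids = split_train_test(objects, n_train=4, n_test=3, seed=42)
```

By contrast, sampling random point locations across the image and taking the object under each point would select large objects more often, which is the size bias the list frame avoids.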

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Acronym | Meaning |
|---|---|
| CNN | convolutional neural network |
| DSM | digital surface model |
| DT | decision tree |
| DTM | digital terrain model |
| ESP2 | Estimation of Scale Parameter 2 |
| FCN | fully convolutional network |
| FSO | feature space optimization |
| GEOBIA | geographic object-based image analysis |
| GEOCNN | geographic object-based convolutional neural network |
| GIS | geographic information system |
| GIScience | geographic information science |
| KNN | k-nearest neighbors |
| LV | local variance |
| Mask R-CNN | Mask Region-based CNN |
| MAUP | modifiable areal unit problem |
| MI | Moran’s I |
| MLC | maximum likelihood classification |
| MRS | multiresolution segmentation |
| nDSM | normalized digital surface model |
| NN | nearest neighbor |
| OBIA | object-based image analysis |
| RF | random forest |
| SPUSPO | spatially partitioned unsupervised segmentation parameter optimization |
| SVM | support vector machine |
| TP | threshold parameter |
| VSURF | variable selection with random forests |
| WV | weighted variance |

References

- Hay, G.J.; Castilla, G. Geographic object-based image analysis (GEOBIA): A new name for a new discipline. In Object-Based Image Analysis: Spatial Concepts for Knowledge-Driven Remote Sensing Applications; Blaschke, T., Lang, S., Hay, G.J., Eds.; Springer: Berlin, Germany, 2008; pp. 75–89.

- Blaschke, T.; Hay, G.J.; Kelly, M.; Lang, S.; Hofmann, P.; Addink, E.; Queiroz Feitosa, R.; van der Meer, F.; van der Werff, H.; van Coillie, F.; et al. Geographic Object-Based Image Analysis—Towards a new paradigm. ISPRS J. Photogramm. Remote Sens. 2014, 87, 180–191.

- Radoux, J.; Bogaert, P. Good Practices for Object-Based Accuracy Assessment. Remote Sens. 2017, 9, 646.

- Ma, L.; Li, M.; Ma, X.; Cheng, L.; Du, P.; Liu, Y. A review of supervised object-based land-cover image classification. ISPRS J. Photogramm. Remote Sens. 2017, 130, 277–293.

- Castilla, G.; Hay, G.J. Image objects and geographic objects. In Object-Based Image Analysis: Spatial Concepts for Knowledge-Driven Remote Sensing Applications; Blaschke, T., Lang, S., Hay, G.J., Eds.; Springer: Berlin, Germany, 2008; pp. 91–110.

- Ye, S.; Pontius, R.G.; Rakshit, R. A review of accuracy assessment for object-based image analysis: From per-pixel to per-polygon approaches. ISPRS J. Photogramm. Remote Sens. 2018, 141, 137–147.

- Hossain, M.D.; Chen, D. Segmentation for Object-Based Image Analysis (OBIA): A review of algorithms and challenges from remote sensing perspective. ISPRS J. Photogramm. Remote Sens. 2019, 150, 115–134.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.-S.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Ma, L.; Liu, Y.; Zhang, X.; Ye, Y.; Yin, G.; Johnson, B.A. Deep learning in remote sensing applications: A meta-analysis and review. ISPRS J. Photogramm. Remote Sens. 2019, 152, 166–177.

- Lang, S.; Hay, G.J.; Baraldi, A.; Tiede, D.; Blaschke, T. GEOBIA Achievements and Spatial Opportunities in the Era of Big Earth Observation Data. ISPRS Int. J. Geo-Inf. 2019, 8, 474.

- Hay, G.J.; Niemann, K.O. Visualizing 3-D Texture: A Three-Dimensional Structural Approach to Model Forest Texture. Can. J. Remote Sens. 1994, 20, 90–101.

- Hay, G.J.; Niemann, K.O.; McLean, G.F. An object-specific image-texture analysis of H-resolution forest imagery. Remote Sens. Environ. 1996, 55, 108–122.

- Marceau, D.J.; Howarth, P.J.; Dubois, J.M.M.; Gratton, D.J. Evaluation of the Grey-Level Co-Occurrence Matrix Method for Land-Cover Classification Using SPOT Imagery. IEEE Trans. Geosci. Remote Sens. 1990, 28, 513–519.

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

- Hay, G.J.; Castilla, G.; Wulder, M.A.; Ruiz, J.R. An automated object-based approach for the multiscale image segmentation of forest scenes. Int. J. Appl. Earth Obs. Geoinf. 2005, 7, 339–359.

- Strahler, A.H.; Woodcock, C.E.; Smith, J.A. On the Nature of Models in Remote Sensing. Remote Sens. Environ. 1986, 20, 121–139.

- Lang, S. Object-based image analysis for remote sensing applications: Modeling reality—Dealing with complexity. In Object-Based Image Analysis: Spatial Concepts for Knowledge-Driven Remote Sensing Applications; Blaschke, T., Lang, S., Hay, G.J., Eds.; Springer: Berlin, Germany, 2008; pp. 3–27.

- Woodcock, C.E.; Strahler, A.H. The Factor of Scale in Remote Sensing. Remote Sens. Environ. 1987, 21, 311–332.

- Blaschke, T.; Strobl, J. What’s wrong with pixels? Some recent developments interfacing remote sensing and GIS. Zeitschrift fur Geoinformationssysteme 2001, 14, 12–17.

- Fotheringham, A.S.; Wong, D.W.S. The modifiable areal unit problem in multivariate statistical analysis. Environ. Plan. A 1991, 23, 1025–1044.

- Hay, G.J.; Blaschke, T.; Marceau, D.J.; Bouchard, A. A comparison of three image-object methods for the multiscale analysis of landscape structure. ISPRS J. Photogramm. Remote Sens. 2003, 57, 327–345.

- Marceau, D.J.; Hay, G.J. Remote Sensing Contributions to the Scale Issue. Can. J. Remote Sens. 1999, 25, 357–366.

- Hay, G.J.; Marceau, D.J. Multiscale Object-Specific Analysis (MOSA): An integrative approach for multiscale landscape analysis. In Remote Sensing Image Analysis: Including the Spatial Domain; de Jong, S.M., van der Meer, F.D., Eds.; Kluwer Academic Publishers: Dordrecht, The Netherlands, 2004; Volume 5, pp. 1–33. ISBN 1-4020-2559-9.

- Hay, G.J.; Marceau, D.J.; Dubé, P.; Bouchard, A. A multiscale framework for landscape analysis: Object-specific analysis and upscaling. Landsc. Ecol. 2001, 16, 471–490.

- Drǎguţ, L.; Csillik, O.; Eisank, C.; Tiede, D. Automated parameterisation for multi-scale image segmentation on multiple layers. ISPRS J. Photogramm. Remote Sens. 2014, 88, 119–127.

- Burnett, C.; Blaschke, T. A multi-scale segmentation/object relationship modelling methodology for landscape analysis. Ecol. Modell. 2003, 168, 233–249.

- Chen, G.; Hay, G.J.; Carvalho, L.M.T.; Wulder, M.A. Object-based change detection. Int. J. Remote Sens. 2012, 33, 4434–4457.

- Gerçek, D.; Toprak, V.; Strobl, J. Object-based classification of landforms based on their local geometry and geomorphometric context. Int. J. Geogr. Inf. Sci. 2011, 25, 1011–1023.

- Grippa, T.; Lennert, M.; Beaumont, B.; Vanhuysse, S.; Stephenne, N.; Wolff, E. An Open-Source Semi-Automated Processing Chain for Urban Object-Based Classification. Remote Sens. 2017, 9, 358.

- Georganos, S.; Grippa, T.; Lennert, M.; Vanhuysse, S.; Johnson, B.A.; Wolff, E. Scale Matters: Spatially Partitioned Unsupervised Segmentation Parameter Optimization for Large and Heterogeneous Satellite Images. Remote Sens. 2018, 10, 1440.

- Griffith, D.; Hay, G. Integrating GEOBIA, Machine Learning, and Volunteered Geographic Information to Map Vegetation over Rooftops. ISPRS Int. J. Geo-Inf. 2018, 7, 462.

- L3Harris Geospatial. Extract Segments Only. Available online: https://www.harrisgeospatial.com/docs/segmentonly.html (accessed on 17 April 2020).

- Chen, G.; Weng, Q.; Hay, G.J.; He, Y. Geographic object-based image analysis (GEOBIA): Emerging trends and future opportunities. GIScience Remote Sens. 2018, 55, 159–182.

- Baatz, M.; Hoffmann, C.; Willhauck, G. Progressing from object-based to object-oriented image analysis. In Object-Based Image Analysis: Spatial Concepts for Knowledge-Driven Remote Sensing Applications; Blaschke, T., Lang, S., Hay, G.J., Eds.; Springer: Berlin, Germany, 2008; pp. 29–42.

- L3Harris Geospatial. Merge Algorithms Background. Available online: https://www.harrisgeospatial.com/docs/backgroundmergealgorithms.html (accessed on 17 April 2020).

- Ma, L.; Cheng, L.; Li, M.; Liu, Y.; Ma, X. Training set size, scale, and features in Geographic Object-Based Image Analysis of very high resolution unmanned aerial vehicle imagery. ISPRS J. Photogramm. Remote Sens. 2015, 102, 14–27.

- Li, M.; Ma, L.; Blaschke, T.; Cheng, L.; Tiede, D. A systematic comparison of different object-based classification techniques using high spatial resolution imagery in agricultural environments. Int. J. Appl. Earth Obs. Geoinf. 2016, 49, 87–98.

- Hanbury, A. Image Segmentation by Region Based and Watershed Algorithms. Wiley Encycl. Comput. Sci. Eng. 2008, 1543–1552.

- Leibniz Institute of Ecological Urban and Regional Development. Segmentation Evaluation. Available online: https://www.ioer.de/segmentation-evaluation/results.html (accessed on 17 April 2020).

- Orfeo ToolBox—Orfeo ToolBox is Not a BLACK box. Available online: https://www.orfeo-toolbox.org/ (accessed on 17 April 2020).

- InterIMAGE—Interpreting Images Freely. Available online: http://www.lvc.ele.puc-rio.br/projects/interimage/ (accessed on 17 April 2020).

- The Remote Sensing and GIS Software Library (RSGISLib). Available online: https://www.rsgislib.org/ (accessed on 17 April 2020).

- eCognition. Trimble Geospatial. Available online: https://geospatial.trimble.com/products-and-solutions/ecognition (accessed on 17 April 2020).

- ENVI—The Leading Geospatial Image Analysis Software. Available online: https://www.harrisgeospatial.com/Software-Technology/ENVI (accessed on 17 April 2020).

- ArcGIS Pro. 2D and 3D GIS Mapping Software—Esri. Available online: https://www.esri.com/en-us/arcgis/products/arcgis-pro/overview (accessed on 17 April 2020).

- PCI Geomatica. Available online: https://www.pcigeomatics.com/software/geomatica/professional (accessed on 17 April 2020).

- Momsen, E.; Metz, M. GRASS GIS Manual: I.segment. Available online: https://grass.osgeo.org/grass74/manuals/i.segment.html (accessed on 17 April 2020).

- Lennert, M. GRASS GIS Manual: I.cutlines. Available online: https://grass.osgeo.org/grass78/manuals/addons/i.cutlines.html (accessed on 17 April 2020).

- Lennert, M. GRASS GIS Manual: I.segment.uspo. Available online: https://grass.osgeo.org/grass78/manuals/addons/i.segment.uspo.html (accessed on 17 April 2020).

- Baatz, M.; Schäpe, A. Multiresolution Segmentation: An optimization approach for high quality multi-scale image segmentation. In Angewandte Geographische Informationsverarbeitung XII; Strobl, J., Blaschke, T., Griesebner, G., Eds.; Salzburg Geographical Materials: Salzburg, Austria, 2000; pp. 12–23.

- Trimble. Reference Book: Trimble eCognition Developer for Windows operating system; Trimble Germany GmbH: Munich, Germany, 2017; ISBN 2008000834.

- Drǎguţ, L.; Tiede, D.; Levick, S.R. ESP: A tool to estimate scale parameter for multiresolution image segmentation of remotely sensed data. Int. J. Geogr. Inf. Sci. 2010, 24, 859–871.

- Zhang, X.; Xiao, P.; Feng, X. Object-specific optimization of hierarchical multiscale segmentations for high-spatial resolution remote sensing images. ISPRS J. Photogramm. Remote Sens. 2020, 159, 308–321.

- L3Harris Geospatial. Segmentation Algorithms Background. Available online: https://www.harrisgeospatial.com/docs/backgroundsegmentationalgorithm.html (accessed on 17 April 2020).

- USGS. High Resolution Orthoimagery, Los Angeles County, California, USA, Entity ID: 3527226_11SMT035485. Available online: https://earthexplorer.usgs.gov/ (accessed on 17 April 2020).

- L3Harris Geospatial. List of Attributes. Available online: https://www.harrisgeospatial.com/docs/attributelist.html (accessed on 24 May 2020).

- Haralick, R.M. Statistical and structural approaches to texture. Proc. IEEE 1979, 67, 786–804.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural Features for Image Classification. IEEE Trans. Syst. Man. Cybern. 1973, SMC-3, 610–621.

- Hall-Beyer, M. Practical guidelines for choosing GLCM textures to use in landscape classification tasks over a range of moderate spatial scales. Int. J. Remote Sens. 2017, 38, 1312–1338.

- Ma, L.; Fu, T.; Tiede, D.; Blaschke, T.; Ma, X.; Chen, D.; Zhou, Z.; Li, M. Evaluation of Feature Selection Methods for Object-Based Land Cover Mapping of Unmanned Aerial Vehicle Imagery Using Random Forest and Support Vector Machine Classifiers. ISPRS Int. J. Geo-Inf. 2017, 6, 51.

- L3Harris Geospatial. Example-Based Classification. Available online: https://www.harrisgeospatial.com/docs/example_based_classification.html (accessed on 17 April 2020).

- L3Harris Geospatial. An Interval Based Attribute Ranking Technique; L3Harris Geospatial: Boulder, CO, USA, 2007.

- Genuer, R.; Poggi, J.-M.; Tuleau-Malot, C. VSURF: An R Package for Variable Selection Using Random Forests. R J. 2015, 7, 19–33.

- Genuer, R.; Poggi, J.-M.; Tuleau-Malot, C. Variable selection using random forests. Pattern Recognit. Lett. 2010, 31, 2225–2236.

- Maxwell, A.E.; Strager, M.P.; Warner, T.A.; Ramezan, C.A.; Morgan, A.N.; Pauley, C.E. Large-Area, High Spatial Resolution Land Cover Mapping Using Random Forests, GEOBIA, and NAIP Orthophotography: Findings and Recommendations. Remote Sens. 2019, 11, 1409.

- Millard, K.; Richardson, M. On the importance of training data sample selection in Random Forest image classification: A case study in peatland ecosystem mapping. Remote Sens. 2015, 7, 8489–8515.

- Perlich, C.; Simonoff, J.S. Tree Induction vs. Logistic Regression: A Learning-Curve Analysis. J. Mach. Learn. Res. 2003, 4, 211–255.

- Müller, A.C.; Guido, S. Introduction to Machine Learning with Python; O’Reilly Media: Sebastopol, CA, USA, 2017; ISBN 9781449369903.

- Maxwell, A.E.; Warner, T.A.; Fang, F. Implementation of machine-learning classification in remote sensing: An applied review. Int. J. Remote Sens. 2018, 39, 2784–2817.

- Stehman, S.V.; Foody, G.M. Key issues in rigorous accuracy assessment of land cover products. Remote Sens. Environ. 2019, 231, 1–23.

- Whiteside, T.G.; Maier, S.W.; Boggs, G.S. Area-based and location-based validation of classified image objects. Int. J. Appl. Earth Obs. Geoinf. 2014, 28, 117–130.

- Osmólska, A.; Hawryło, P. Using a GEOBIA framework for integrating different data sources and classification methods in context of land use/land cover mapping. Geod. Cartogr. 2018, 67, 99–116.

- Liu, X.H.; Skidmore, A.K.; Van Oosten, H. Integration of classification methods for improvement of land-cover map accuracy. ISPRS J. Photogramm. Remote Sens. 2002, 56, 257–268.

- Belgiu, M.; Drǎguţ, L.; Strobl, J. Quantitative evaluation of variations in rule-based classifications of land cover in urban neighbourhoods using WorldView-2 imagery. ISPRS J. Photogramm. Remote Sens. 2014, 87, 205–215.

- Mountrakis, G.; Im, J.; Ogole, C. Support vector machines in remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2011, 66, 247–259.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Strobl, C.; Malley, J.; Tutz, G. An Introduction to Recursive Partitioning: Rationale, Application, and Characteristics of Classification and Regression Trees, Bagging, and Random Forests. Psychol. Methods 2009, 14, 323–348.

- Belgiu, M.; Drăguţ, L. Random forest in remote sensing: A review of applications and future directions. ISPRS J. Photogramm. Remote Sens. 2016, 114, 24–31.

- Chollet, F. Deep Learning with Python; Manning Publications Co.: Shelter Island, NY, USA, 2018; ISBN 9781617294433.

- Pontius, R.G., Jr.; Millones, M. Death to Kappa: Birth of quantity disagreement and allocation disagreement for accuracy assessment. Int. J. Remote Sens. 2011, 32, 4407–4429.

- Liu, C.; Frazier, P.; Kumar, L. Comparative assessment of the measures of thematic classification accuracy. Remote Sens. Environ. 2007, 107, 606–616.

- L3Harris Geospatial. Calculate Confusion Matrices. Available online: https://www.harrisgeospatial.com/docs/CalculatingConfusionMatrices.html (accessed on 17 April 2020).

- Trimble. eCognition Developer: Tutorial 6—Working with the Accuracy Assessment Tool. Available online: https://docs.ecognition.com/v9.5.0/Resources/Images/Tutorial%206%20-%20Accuracy%20Assessment%20Tool.pdf (accessed on 17 April 2020).

- Cai, L.; Shi, W.; Miao, Z.; Hao, M. Accuracy Assessment Measures for Object Extraction from Remote Sensing Images. Remote Sens. 2018, 10, 303.

- Chartrand, G.; Cheng, P.M.; Vorontsov, E.; Drozdzal, M.; Turcotte, S.; Pal, C.J.; Kadoury, S.; Tang, A. Deep learning: A primer for radiologists. Radiographics 2017, 37, 2113–2131.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Yamashita, R.; Nishio, M.; Do, R.K.G.; Togashi, K. Convolutional neural networks: An overview and application in radiology. Insights Imaging 2018, 9, 611–629.

- Zhang, L.; Zhang, L.; Du, B. Deep Learning for Remote Sensing Data: A Technical Tutorial on the State of the Art. IEEE Geosci. Remote Sens. Mag. 2016, 4, 22–40.

- Cheng, G.; Han, J.; Lu, X. Remote Sensing Image Scene Classification: Benchmark and State of the Art. Proc. IEEE 2017, 105, 1865–1883.

- Ball, J.E.; Anderson, D.T.; Chan, C.S. A Comprehensive Survey of Deep Learning in Remote Sensing: Theories, Tools and Challenges for the Community. J. Appl. Remote Sens. 2017, 11, 1–54.

- Li, Y.; Zhang, H.; Xue, X.; Jiang, Y.; Shen, Q. Deep learning for remote sensing image classification: A survey. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2018, 8, 1–17.

- Fu, T.; Ma, L.; Li, M.; Johnson, B.A. Using convolutional neural network to identify irregular segmentation objects from very high-resolution remote sensing imagery. J. Appl. Remote Sens. 2018, 12, 1–21.

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Pasupa, K.; Sunhem, W. A comparison between shallow and deep architecture classifiers on small dataset. In Proceedings of the International Conference on Information Technology and Electrical Engineering, Yogyakarta, Indonesia, 5–6 October 2016.

- Hay, G.J. Visualizing Scale-Domain Manifolds: A Multiscale Geo-Object-Based Approach. In Scale Issues in Remote Sensing; Weng, Q., Ed.; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2014; pp. 141–169. ISBN 978-1-118-30504-1.

- Li, Y.; Ye, S.; Bartoli, I. Semisupervised classification of hurricane damage from postevent aerial imagery using deep learning. J. Appl. Remote Sens. 2018, 12, 1–13.

- Vetrivel, A.; Gerke, M.; Kerle, N.; Nex, F.; Vosselman, G. Disaster damage detection through synergistic use of deep learning and 3D point cloud features derived from very high resolution oblique aerial images, and multiple-kernel-learning. ISPRS J. Photogramm. Remote Sens. 2018, 140, 45–59. [Google Scholar] [CrossRef]

- Jozdani, S.E.; Johnson, B.A.; Chen, D. Comparing Deep Neural Networks, Ensemble Classifiers, and Support Vector Machine Algorithms for Object-Based Urban Land Use/Land Cover Classification. Remote Sens. 2019, 11, 1713. [Google Scholar] [CrossRef] [Green Version]

- Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Convolutional Neural Networks for Large-Scale Remote-Sensing Image Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 645–657. [Google Scholar] [CrossRef] [Green Version]

- Zhao, W.; Du, S.; Emery, W.J. Object-Based Convolutional Neural Network for High-Resolution Imagery Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 3386–3396. [Google Scholar] [CrossRef]

- Zhang, C.; Sargent, I.; Pan, X.; Li, H.; Gardiner, A.; Hare, J.; Atkinson, P.M. An object-based convolutional neural network (OCNN) for urban land use classification. Remote Sens. Environ. 2018, 216, 57–70. [Google Scholar] [CrossRef] [Green Version]

- Mboga, N.; Georganos, S.; Grippa, T.; Lennert, M.; Vanhuysse, S.; Wolff, E. Fully Convolutional Networks and Geographic Object-Based Image Analysis for the Classification of VHR Imagery. Remote Sens. 2019, 11, 597.

- Chen, Y.; Ming, D.; Lv, X. Superpixel based land cover classification of VHR satellite image combining multi-scale CNN and scale parameter estimation. Earth Sci. Inform. 2019, 12, 341–363.

- Davari Majd, R.; Momeni, M.; Moallem, P. Transferable Object-Based Framework Based on Deep Convolutional Neural Networks for Building Extraction. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 2627–2635.

- Papadomanolaki, M.; Vakalopoulou, M.; Karantzalos, K. A Novel Object-Based Deep Learning Framework for Semantic Segmentation of Very High-Resolution Remote Sensing Data: Comparison with Convolutional and Fully Convolutional Networks. Remote Sens. 2019, 11, 684.

- Huang, H.; Lan, Y.; Yang, A.; Zhang, Y.; Wen, S.; Deng, J. Deep learning versus Object-based Image Analysis (OBIA) in weed mapping of UAV imagery. Int. J. Remote Sens. 2020, 41, 3446–3479.

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Prakash, N.; Manconi, A.; Loew, S. Mapping Landslides on EO Data: Performance of Deep Learning Models vs. Traditional Machine Learning Models. Remote Sens. 2020, 12, 346.

- Liu, T.; Abd-Elrahman, A.; Morton, J.; Wilhelm, V.L. Comparing fully convolutional networks, random forest, support vector machine, and patch-based deep convolutional neural networks for object-based wetland mapping using images from small unmanned aircraft system. GISci. Remote Sens. 2018, 55, 243–264.

- Liu, S.; Qi, Z.; Li, X.; Yeh, A. Integration of Convolutional Neural Networks and Object-Based Post-Classification Refinement for Land Use and Land Cover Mapping with Optical and SAR Data. Remote Sens. 2019, 11, 690.

- Feng, W.; Sui, H.; Hua, L.; Xu, C. Improved Deep Fully Convolutional Network with Superpixel-Based Conditional Random Fields for Building Extraction. Int. Geosci. Remote Sens. Symp. 2019, 52–55.

- Zhou, K.; Ming, D.; Lv, X.; Fang, J.; Wang, M. CNN-based land cover classification combining stratified segmentation and fusion of point cloud and very high-spatial resolution remote sensing image data. Remote Sens. 2019, 11, 2065.

- Song, D.; Tan, X.; Wang, B.; Zhang, L.; Shan, X.; Cui, J. Integration of super-pixel segmentation and deep-learning methods for evaluating earthquake-damaged buildings using single-phase remote sensing imagery. Int. J. Remote Sens. 2020, 41, 1040–1066.

- Sothe, C.; De Almeida, C.M.; Schimalski, M.B.; Liesenberg, V.; La Rosa, L.E.C.; Castro, J.D.B.; Feitosa, R.Q. A comparison of machine and deep-learning algorithms applied to multisource data for a subtropical forest area classification. Int. J. Remote Sens. 2019, 41, 1943–1969.

- Zhang, X.; Wang, Q.; Chen, G.; Dai, F.; Zhu, K.; Gong, Y.; Xie, Y. An object-based supervised classification framework for very-high-resolution remote sensing images using convolutional neural networks. Remote Sens. Lett. 2018, 9, 373–382.

- Poomani Alias Punitha, M.; Sutha, J. Object based classification of high resolution remote sensing image using HRSVM-CNN classifier. Eur. J. Remote Sens. 2019.

- Timilsina, S.; Sharma, S.K.; Aryal, J. Mapping Urban Trees Within Cadastral Parcels Using an Object-based Convolutional Neural Network. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, IV-5/W2, 111–117.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Zhang, W.; Witharana, C.; Liljedahl, A.K.; Kanevskiy, M. Deep Convolutional Neural Networks for Automated Characterization of Arctic Ice-Wedge Polygons in Very High Spatial Resolution Aerial Imagery. Remote Sens. 2018, 10, 1487.

- Zhao, K.; Kang, J.; Jung, J.; Sohn, G. Building Extraction from Satellite Images Using Mask R-CNN with Building Boundary Regularization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 247–251.

- Ji, S.; Wei, S.; Lu, M. Fully Convolutional Networks for Multisource Building Extraction from an Open Aerial and Satellite Imagery Data Set. IEEE Trans. Geosci. Remote Sens. 2019, 57, 574–586.

- Ji, S.; Shen, Y.; Lu, M.; Zhang, Y. Building Instance Change Detection from Large-Scale Aerial Images using Convolutional Neural Networks and Simulated Samples. Remote Sens. 2019, 11, 1343.

- Wen, Q.; Jiang, K.; Wang, W.; Liu, Q.; Guo, Q.; Li, L.; Wang, P. Automatic Building Extraction from Google Earth Images under Complex Backgrounds Based on Deep Instance Segmentation Network. Sensors (Switzerland) 2019, 19, 333.

- Nie, S.; Jiang, Z.; Zhang, H.; Cai, B.; Yao, Y. Inshore Ship Detection Based on Mask R-CNN. Int. Geosci. Remote Sens. Symp. 2018, 693–696.

- Zhang, Y.; Zhang, Y.; Li, S.; Zhang, J. Accurate Detection of Berthing Ship Target Based on Mask R-CNN. In Proceedings of the International Conference on Image and Video Processing, and Artificial Intelligence, Shanghai, China, 15–17 August 2018; Volume 1083602, pp. 1–9.

- Feng, Y.; Diao, W.; Chang, Z.; Yan, M.; Sun, X.; Gao, X. Ship Instance Segmentation From Remote Sensing Images Using Sequence Local Context Module. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 1025–1028.

- Zhao, T.; Yang, Y.; Niu, H.; Chen, Y.; Wang, D. Comparing U-Net convolutional networks with fully convolutional networks in the performances of pomegranate tree canopy segmentation. In Proceedings of the SPIE Asia-Pacific Remote Sensing Conference, Multispectral, Hyperspectral, Ultraspectral Remote Sensing Technology Techniques and Applications VII, Honolulu, HI, USA, 24–26 September 2018; Volume 10780, pp. 1–9.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–8 December 2012; pp. 1–9.

- Guirado, E.; Tabik, S.; Alcaraz-Segura, D.; Cabello, J.; Herrera, F. Deep-learning Versus OBIA for scattered shrub detection with Google Earth Imagery: Ziziphus lotus as case study. Remote Sens. 2017, 9, 1220.

- Bing. Microsoft Releases 18M Building Footprints in Uganda and Tanzania to Enable AI Assisted Mapping. Available online: https://blogs.bing.com/maps/2019-09/microsoft-releases-18M-building-footprints-in-uganda-and-tanzania-to-enable-ai-assisted-mapping (accessed on 17 April 2020).

- Bing. Microsoft Releases 12 million Canadian Building Footprints as Open Data. Available online: https://blogs.bing.com/maps/2019-03/microsoft-releases-12-million-canadian-building-footprints-as-open-data (accessed on 17 April 2020).

- Bing. Microsoft Releases 125 Million Building Footprints in the US as Open Data. Available online: https://blogs.bing.com/maps/2018-06/microsoft-releases-125-million-building-footprints-in-the-us-as-open-data (accessed on 17 April 2020).

- Duarte, D.; Nex, F.; Kerle, N.; Vosselman, G. Multi-resolution feature fusion for image classification of building damages with convolutional neural networks. Remote Sens. 2018, 10, 1636.

| Spectral | Textural ¹ | Geometrical | Contextual ² |
|---|---|---|---|
| Mean | Homogeneity | Area | Length of shared border |
| Minimum | Contrast | Perimeter | Center-to-center distance |
| Maximum | Entropy | Elongation | Number of sub-objects |

| Methodological Section | Requirements and Recommendations |
|---|---|
| 3.1. H-Resolution Image Acquisition | |
| 3.2. Image and Ancillary Data Pre-Processing | |
| 3.3. Classification Design | |
| 3.4. Segmentation and Merging | |
| 3.5. Feature Extraction and Feature Space Reduction | |
| 3.6. Image-Object Classification | |
| 3.7. Accuracy Assessment | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kucharczyk, M.; Hay, G.J.; Ghaffarian, S.; Hugenholtz, C.H. Geographic Object-Based Image Analysis: A Primer and Future Directions. Remote Sens. 2020, 12, 2012. https://doi.org/10.3390/rs12122012