Automatic Mapping of Landslides by the ResU-Net

Abstract

1. Introduction

2. Study Area

3. Data and Method

3.1. Data Source

3.1.1. High Resolution Satellite Images

3.1.2. Landslide Inventory Maps

3.1.3. Preparation of Training and Validation Datasets

3.2. Proposed Models

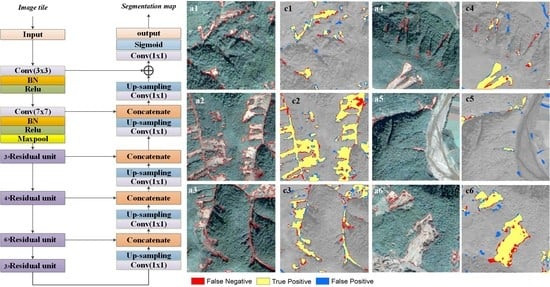

3.2.1. Baseline Model

3.2.2. Residual Learning Block

3.2.3. Proposed ResU-Net

3.3. Implementation of Training

3.4. Evaluation

4. Results

5. Discussion

5.1. Model Comparisons

5.2. Limitations of the Model

5.3. Potential Applications

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Path | Unit | Convolutional Layer | Filter Size, Channels | Output Size (Width × Height × Channels) |
|---|---|---|---|---|
| Input | | | | 600 × 600 × 3 |
| Encoding path | Head | conv 1 | 3 × 3, 64 | 600 × 600 × 64 |
| | | conv 2 | 7 × 7, 64 | 300 × 300 × 64 |
| | | max pool | 3 × 3, 64 | 150 × 150 × 64 |
| | Residual unit_1 | conv 3-11 | | 150 × 150 × 256 |
| | Residual unit_2 | conv 12-23 | | 75 × 75 × 512 |
| | Residual unit_3 | conv 24-41 | | 38 × 38 × 1024 |
| | Residual unit_4 | conv 42-50 | | 19 × 19 × 2048 |
| Decoding path | Concatenate_1 | conv 51 | 1 × 1, 1024 | 38 × 38 × 1024 |
| | Concatenate_2 | conv 52 | 1 × 1, 512 | 75 × 75 × 512 |
| | Concatenate_3 | conv 53 | 1 × 1, 256 | 150 × 150 × 256 |
| | Concatenate_4 | conv 54 | 1 × 1, 64 | 300 × 300 × 64 |
| | Addition | conv 55 | 1 × 1, 64 | 600 × 600 × 64 |
| | Output | conv 56 | 1 × 1, 3 | 600 × 600 × 3 |
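The spatial sizes listed for the encoding path can be checked with a short sketch. It assumes, since the table does not say so explicitly, that every size reduction comes from a stride-2 layer with "same" padding, so that an odd input size rounds up (75 becomes 38). The stride values and the helper names `halve` and `trace_encoder` are illustrative assumptions; only the layer names and channel counts come from the table.

```python
import math


def halve(size: int) -> int:
    """Spatial size after a stride-2 layer, assuming 'same' padding (ceil division)."""
    return math.ceil(size / 2)


# (unit name, assumed stride, output channels). Names and channel counts are
# read from the architecture table; the strides are inferred from the sizes.
ENCODER = [
    ("conv 1", 1, 64),
    ("conv 2", 2, 64),
    ("max pool", 2, 64),
    ("Residual unit_1", 1, 256),
    ("Residual unit_2", 2, 512),
    ("Residual unit_3", 2, 1024),
    ("Residual unit_4", 2, 2048),
]


def trace_encoder(input_size: int = 600):
    """Return (name, width, height, channels) for each encoder stage."""
    size = input_size
    shapes = []
    for name, stride, channels in ENCODER:
        if stride == 2:
            size = halve(size)
        shapes.append((name, size, size, channels))
    return shapes


if __name__ == "__main__":
    for name, width, height, channels in trace_encoder():
        print(f"{name:16s} {width} x {height} x {channels}")
```

Under these assumptions the trace reproduces the widths in the table (600, 300, 150, 150, 75, 38, 19). The decoding path then restores the same sizes in reverse order.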

| Model | Precision | Recall | F1 |
|---|---|---|---|
| U-Net | 0.93 | 0.70 | 0.80 |
| ResU-Net | 0.96 | 0.83 | 0.89 |
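The F1 values in the table are consistent with the precision and recall columns if F1 is taken as their harmonic mean, $F_1 = 2PR/(P+R)$. This is the standard definition and is assumed here. The sketch below recomputes F1 from the reported values; the function name `f1_score` and the dictionary `REPORTED` are illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (standard F1 definition, assumed)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the results table.
REPORTED = {
    "U-Net": (0.93, 0.70, 0.80),
    "ResU-Net": (0.96, 0.83, 0.89),
}

if __name__ == "__main__":
    for model, (precision, recall, reported_f1) in REPORTED.items():
        recomputed = round(f1_score(precision, recall), 2)
        print(f"{model}: recomputed F1 = {recomputed:.2f}, reported F1 = {reported_f1:.2f}")
```

Rounded to two decimals, the recomputed values match the reported ones for both models. Most of the F1 gain of ResU-Net over U-Net comes from recall (0.83 against 0.70), with a smaller gain in precision.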

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Qi, W.; Wei, M.; Yang, W.; Xu, C.; Ma, C. Automatic Mapping of Landslides by the ResU-Net. Remote Sens. 2020, 12, 2487. https://doi.org/10.3390/rs12152487