1. Introduction

Accelerating urbanization and the emergence of the smart city concept place new demands on the refinement of urban governance. The spatial pattern of urban land use, which affects urban activities, is important information for urban investigation, modeling, and resource allocation [1,2,3,4]. Traditional methods for mapping urban land use rely on remote sensing, and the identified land patches are relatively fragmented, which differs from the more regular spatial units of urban management. Gong et al. (2019) regarded parcels bounded by road networks as the intrinsic segmentation of urban land use [5]. Many scholars have used OpenStreetMap (OSM) to delimit parcel boundaries, and the methods performed well [6,7]. Currently, OSM is the largest project in collaborative and publicly licensed geospatial data collection, and it has thus been widely used as an alternative or supplement to authoritative data in related research [8,9,10,11]. With the continuous development and growth of OSM, its data quality is constantly improving. OSM is therefore considered a promising data source for rapid and reliable parcel delineation to meet the needs of fine-grained urban management [3,12].

Cities with strong human activity show marked and continuous anthropogenic change in the urban underlying surface [13]. Since socio-economic characteristics have no direct relationship with the spectral reflectance detected by remote sensing sensors, it is very difficult to detect land use from remote sensing images alone [14]. With the enhanced accessibility of data generated by Internet services and mobile devices, volunteered geographic information (VGI), and open data shared by government departments, more researchers are committed to identifying urban land use with different combinations of physical characteristics and social attributes [15,16,17]. For identifying residential and work functional areas, mobile signaling data and taxi trajectory data have proved effective [18,19,20], but the data are difficult to obtain. Point of interest (POI) data are closely related to human life and have the advantages of large sample size and easy accessibility. For example, Song et al. (2018) directly used POI to identify the land use of polygons obtained from high-resolution remote sensing image segmentation [21]. Lu et al. (2020) mapped the urban spatial structure with POI [22]. At present, most research on urban spatial structure focuses on two-dimensional space, which cannot fully reflect the state of modern urban spaces characterized by various high-rise buildings [23,24]. Street view expands the evaluation scope from a two-dimensional plane to the three-dimensional level and enables people to observe the streetscape of a large city, thus providing close-range sensing and the ground details lacking in overhead images, as well as information about the socio-economic use of urban land. Li et al. (2017) used Google Street View to determine the land use types of different building units along the street [25]. Cao et al. (2018) achieved good results in urban land use recognition at the pixel scale by fusing a remote sensing image and street view [26]. However, the performance of street view in land use identification at the parcel scale, which is more directly related to urban land management, still needs to be evaluated.

The fusion of technical sensor data with human sensor and collective sensing data performs well in urban research [27,28]. There is no doubt that the combination of street view and remote sensing data can improve the recognition accuracy of essential urban land use, but creative work is needed to develop models that integrate the different data [29]. In addition, although the influence of street view on the accuracy of urban land use classification was evaluated in previous studies, different feature combinations have not been evaluated. Machine learning algorithms (MLAs) have emerged in the field of accurate predictive modeling. They can identify complex structures, especially in irregular data, and generate high-precision predictive models. Therefore, MLAs have been widely used in predictive modeling, including image classification [30]. Among the existing MLAs, random forest (RF) is a supervised ensemble learning algorithm based on decision tree classification. It is a nonparametric classifier, and therefore it does not require the values of variables to follow a particular statistical distribution [31]. Previous studies have shown that the RF algorithm has good robustness and efficiency in training [32,33].

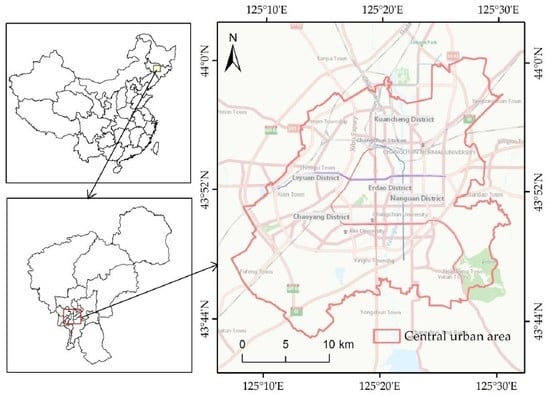

Changchun is the capital city of China’s Jilin Province and is located in the geographic center of Northeast China and of Northeast Asia. As Changchun is an important part of the national Harbin-Changchun urban agglomeration, the development and construction of the city require the support of urban land use data. On the basis of RF, this paper attempts to integrate remote sensing data, street view data, POI, and night light data to establish a classification method for mapping urban land use in Changchun. Moreover, we aim to assess the classification accuracies with and without street view features, and to quantify the contribution of different features or feature combinations to the classification. This research can provide a reference for urban multi-source data fusion, enrich urban feature indicators, and improve the accuracy of urban land use classification. It can also provide a technical route for management departments, scholars, and relevant consulting companies to obtain urban land use data, especially for regional research or comparative studies between cities.

5. Discussion

In this study, OSM was used to delimit the boundaries of urban parcels. The results of street view semantic segmentation were applied to enrich the multi-dimensional description of urban parcels, and RF was used to identify the land use of the urban parcels. The results show that, based on their spatial distribution characteristics, the street view elements were related to urban functions, and it is reasonable and feasible to describe an urban parcel according to the characteristics of the street view elements. The contribution of the street view characteristics to the optimal model reached 20.6%. Mixed land use within urban parcels is an important obstacle to the accurate identification of urban land use [67]. The accuracy of identification is related to the purity of the urban parcel. The results in this study show that the purity of residential land, industrial land, and green land is high, and thus the accuracies of their classifications are high. The land use types recorded in the current construction land survey data of Changchun City are pure. The parcels in these data are finer than those divided by OSM, but there are no strict rules for their boundaries, and there may be no roads or spaces between different functional parcels. At present, OSM is still a widely used data source for segmentation, and the urban parcel boundaries obtained are relatively regular and conform to the actual coverage of urban parcels [67,68].
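The 20.6% figure reported above is a group-level contribution, that is, the importance of all street view features taken together. As a minimal sketch of how such a group share could be derived, assuming per-feature importance scores are already available from a trained RF model, the function below sums the scores within each feature group and normalizes them. The feature names, group labels, and scores are hypothetical placeholders and are not values from this study.

```python
from collections import defaultdict


def group_contributions(importances, feature_groups):
    """Sum per-feature importances by group and normalize to shares of 1.0."""
    totals = defaultdict(float)
    for feature, score in importances.items():
        totals[feature_groups[feature]] += score
    grand_total = sum(totals.values())
    if grand_total <= 0:
        raise ValueError("importance scores must sum to a positive value")
    return {group: total / grand_total for group, total in totals.items()}


# Hypothetical scores for illustration only (not results from the study).
example_importances = {
    "ndvi_mean": 0.30,
    "poi_residential_density": 0.25,
    "sv_building_ratio": 0.15,
    "sv_vegetation_ratio": 0.10,
    "night_light_mean": 0.20,
}
# Hypothetical mapping from each feature to its data source group.
example_groups = {
    "ndvi_mean": "remote_sensing",
    "poi_residential_density": "poi",
    "sv_building_ratio": "street_view",
    "sv_vegetation_ratio": "street_view",
    "night_light_mean": "night_light",
}

shares = group_contributions(example_importances, example_groups)
for group, share in sorted(shares.items(), key=lambda item: -item[1]):
    print(f"{group}: {share:.1%}")
```

Grouping the scores this way allows data sources to be compared directly, although importance scores from tree ensembles can be split among correlated features and should be interpreted with care.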

The POI data used in this study were obtained from commercial companies and were originally intended to serve navigation. The POI types do not exactly correspond to the land use of urban parcels. For example, medical facility POIs include clinics and pharmacies, but these facilities do not occupy separate land; only specialized hospitals and large hospitals have independent land. Therefore, the POI data were filtered in the data preprocessing stage. The POI characteristics contributed substantially to the accurate identification of urban parcels with independent land, such as residential and educational parcels, but not to other functional parcels. In the future, the introduction of a toponymic database managed by the government should be considered.
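The filtering step described above could look roughly like the following. This is a sketch under stated assumptions: the category names and the set of categories treated as occupying independent land are hypothetical, since the filtering rules actually used in the study are not given here.

```python
# Hypothetical categories assumed to occupy their own land.
INDEPENDENT_LAND_CATEGORIES = frozenset(
    {"large_hospital", "specialized_hospital", "school", "residential_community"}
)


def filter_pois(pois, keep_categories=INDEPENDENT_LAND_CATEGORIES):
    """Keep only POIs whose category is assumed to occupy independent land."""
    return [poi for poi in pois if poi["category"] in keep_categories]


# Hypothetical POI records for illustration only.
sample_pois = [
    {"name": "Pharmacy A", "category": "pharmacy"},
    {"name": "Hospital B", "category": "large_hospital"},
    {"name": "Clinic C", "category": "clinic"},
    {"name": "School D", "category": "school"},
]

kept = filter_pois(sample_pois)
print([poi["name"] for poi in kept])
```

An explicit list of categories to keep makes the assumption about which facilities have independent land easy to inspect and revise.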

Street view can supplement the social attribute information of an urban parcel from the perspective of ground observation [69]. It compensates for the lack of ground detail in overhead images and provides useful auxiliary information that can be combined with remote sensing images to improve performance [26]. The introduction of street view effectively improves the accuracy of the urban land use classification of public service-type parcels, which are typically small but have a high degree of mixing and are difficult to identify. Street view images contain rich information, which needs to be further explored [70,71]. The street view segmentation model used in this study was trained on the CityScapes dataset. With this approach, the elements in each picture must first be identified, and features are then built from them to identify the urban land use type. It makes sense to build a dataset directly related to urban land use in the future, although this is a complex and onerous task. For example, street view data could be collected and labeled with the corresponding land use tags and then combined with deep learning technology to predict urban land use directly from pictures. Such a dataset would help to improve the efficiency of the land use identification of urban parcels and facilitate street view applications in other cities.

6. Conclusions

Given the need for urban land use classification and the new data environment, this study applied street view data, aiming to provide a reference for urban multi-source data fusion, enrich urban characteristic indicators, and provide new ideas for improving the accuracy of urban land use classification. The street view features performed better than the POI characteristics. The prediction accuracy was higher in areas built later. Since building functions in areas built earlier have changed, their street view features cannot effectively express their existing functions. If the study area were divided into different areas according to construction time and urban land use prediction were then carried out separately for each, higher accuracy could be obtained.

Although this study attempted to improve the classification of urban land use, there are still problems in this process that need further study. First, urban parcels delimited by OSM have mixed land use. The land use of a parcel was determined by the actual construction land type with the largest area proportion, whereas urban parcels have three-dimensional characteristics. The actual use is related to the building area and the business characteristics, and thus the classification system of urban land use needs to be improved. In addition, with the emergence of spatiotemporal big data, it is necessary to introduce human activity big data with finer temporal and spatial resolution.
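The labeling rule described above, which assigns each parcel the construction land type with the largest area proportion, can be sketched as follows. The function also returns the area share of that dominant type, which is one possible way to quantify parcel purity. This purity definition, the land use names, and the areas are assumptions made for illustration and are not taken from the study.

```python
def dominant_land_use(areas_by_type):
    """Return (dominant type, its area share) for a single parcel.

    areas_by_type maps each land use type to the area it covers in the parcel.
    """
    total_area = sum(areas_by_type.values())
    if total_area <= 0:
        raise ValueError("parcel must contain a positive total area")
    label = max(areas_by_type, key=areas_by_type.get)
    return label, areas_by_type[label] / total_area


# Hypothetical parcel with areas in square meters.
parcel = {"residential": 6000.0, "commercial": 3000.0, "green": 1000.0}
label, purity = dominant_land_use(parcel)
print(label, round(purity, 2))
```

In this hypothetical parcel, 40% of the land is discarded by the label, which illustrates why an area-based rule loses information for mixed parcels and why building area or business characteristics might be considered in a refined classification system.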