Transferability of Convolutional Neural Network Models for Identifying Damaged Buildings Due to Earthquake

Abstract

1. Introduction

2. Study Area and Data Processing

2.1. xBD Dataset

2.2. The Wenchuan Earthquake Dataset

2.2.1. Wenchuan Dataset

2.2.2. Beichuan Dataset

2.3. Sampling

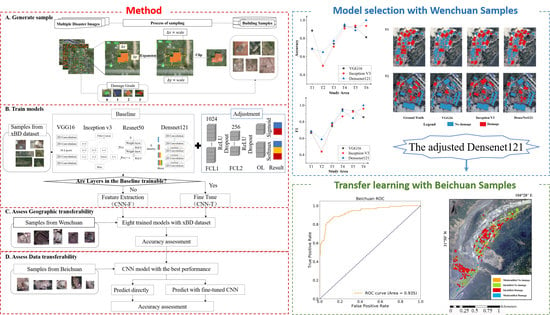

3. Methods

3.1. CNN Base Models

3.2. Training Method for the Networks

3.3. The Adjusted CNN Models and Experimental Settings

3.4. Accuracy Metrics

4. Results

4.1. CNN Performance on the xBD Dataset

4.2. Geographic Transferability of the CNN Models

4.3. Applicability of the Models in Aerial Images

5. Discussion

5.1. Impact of Sample Imbalance

5.2. Detailed Classification of Building Damage Levels

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| VHR | Very-high resolution |
| SAR | Synthetic aperture radar |
| OBIA | Object-based image analysis |
| CNN | Convolutional neural network |
| RF | Random forest |
| SVM | Support vector machine |
| DL | Deep learning |
| Mw | Moment magnitude |
| RGB | Red, green, blue |
| FEMA | Federal Emergency Management Agency |
| FCL | Fully connected layer |
| OL | Output layer |
| TP | True positive |
| TN | True negative |
| FP | False positive |
| FN | False negative |
| ReLU | Rectified linear unit |
| AUC | Area under the curve |
| F1 | Harmonic mean of precision and recall |
| HPC | High-performance computing |
| ROC | Receiver operating characteristic |
| UAV | Unmanned aerial vehicle |


| Dataset | Number of Samples | |||||

|---|---|---|---|---|---|---|

| No. 1 | Minor. 2 | Major. 3 | Destroyed | Total | ||

| xBD | 165,844 | 17,929 | 14,173 | 17,634 | 215,580 | |

| Wenchuan | T1 | 15 | 15 | 5 | 35 | |

| T2 | 9 | 8 | 3 | 20 | ||

| T3 | 11 | 10 | 10 | 31 | ||

| T4 | 4 | 1 | 10 | 15 | ||

| T5 | 5 | 3 | 5 | 13 | ||

| T6 | 3 | 5 | 8 | 16 | ||

| Beyond T1–T6 | - | 261 | 534 | 795 | ||

| Beichuan | 204 | 36 | 117 | 357 | ||
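The later sample and accuracy tables use a binary "No"/"Damage" split, whereas xBD provides four damage levels. The sketch below assumes (this is not stated in the table itself) that minor damage, major damage and destroyed are all pooled into "Damage", and applies that rule to the xBD counts above. The label strings and the helper name `to_binary_counts` are hypothetical.

```python
# xBD class counts copied from the sample table above.
XBD_COUNTS = {
    "no-damage": 165_844,
    "minor-damage": 17_929,
    "major-damage": 14_173,
    "destroyed": 17_634,
}

# Assumption: every damaged level is pooled into a single "damage" class.
BINARY_MAP = {
    "no-damage": "no",
    "minor-damage": "damage",
    "major-damage": "damage",
    "destroyed": "damage",
}


def to_binary_counts(counts, mapping=BINARY_MAP):
    """Aggregate per-level sample counts into binary counts using `mapping`."""
    binary = {}
    for level, n in counts.items():
        key = mapping[level]
        binary[key] = binary.get(key, 0) + n
    return binary


binary = to_binary_counts(XBD_COUNTS)
print(binary)                                    # {'no': 165844, 'damage': 49736}
print(sum(binary.values()))                      # 215580
print(round(binary["no"] / binary["damage"], 2))  # 3.33
```

Under this assumption, xBD contains roughly 3.3 undamaged buildings for every damaged one. This is the kind of imbalance that the down-sampling and cost-sensitive strategies compared in the last results table are meant to address.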

| Item | Setting |
|---|---|
| HPC resource | NVIDIA GeForce GTX 1080 Ti GPU |
| DL framework | Keras 2.3.1, TensorFlow 2.3.1 |
| Development environment | Jupyter Notebook 6.0.3 |
| Programming language | Python 3.7.0 |
| Optimizer | Adam |
| Loss function | Cross-entropy |
| Learning rate | 0.0001 |
| Batch size | 32 |
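The optimizer, loss, learning rate and batch size listed above correspond to a standard training step. The sketch below is a framework-free NumPy illustration, not the authors' Keras code. It implements textbook Adam using the tf.keras default values β₁ = 0.9, β₂ = 0.999 and ε = 1e-7, which the table does not list and are therefore assumptions. It also computes the mean categorical cross-entropy over a batch of 32.

```python
import numpy as np

LEARNING_RATE = 1e-4  # from the settings table
BATCH_SIZE = 32       # from the settings table


def cross_entropy(probs, labels, eps=1e-12):
    """Mean categorical cross-entropy.

    probs: (batch, n_classes) predicted class probabilities (e.g. softmax output).
    labels: (batch,) integer class ids.
    """
    picked = probs[np.arange(labels.shape[0]), labels]
    return float(-np.mean(np.log(np.clip(picked, eps, 1.0))))


class Adam:
    """Textbook Adam update; beta/epsilon values assumed equal to the tf.keras defaults."""

    def __init__(self, lr=LEARNING_RATE, beta1=0.9, beta2=0.999, eps=1e-7):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = None  # running mean of gradients (first moment)
        self.v = None  # running mean of squared gradients (second moment)
        self.t = 0     # step counter used for bias correction

    def step(self, params, grads):
        if self.m is None:
            self.m = np.zeros_like(params)
            self.v = np.zeros_like(params)
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grads
        self.v = self.beta2 * self.v + (1 - self.beta2) * grads**2
        m_hat = self.m / (1 - self.beta1**self.t)  # bias-corrected first moment
        v_hat = self.v / (1 - self.beta2**self.t)  # bias-corrected second moment
        return params - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)


# A batch of 32 two-class predictions that are all 50/50 gives a loss of ln(2).
probs = np.full((BATCH_SIZE, 2), 0.5)
labels = np.zeros(BATCH_SIZE, dtype=int)
print(round(cross_entropy(probs, labels), 4))  # 0.6931

# On its first step, Adam moves each weight by about lr, regardless of gradient scale.
opt = Adam()
new_params = opt.step(np.zeros(3), np.array([0.5, -2.0, 1e-3]))
print(new_params)
```

The last line shows why a learning rate of 0.0001 directly bounds the early update size under Adam: after bias correction, the first step is roughly `lr * sign(gradient)`.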

| Dataset | Subset | Training: No | Training: Damage | Validation: No | Validation: Damage | Testing: No | Testing: Damage |
|---|---|---|---|---|---|---|---|
| xBD | - | 29,636 | 28,626 | 1647 | 1590 | 1646 | 1591 |
| Wenchuan | T1 | - | - | - | - | 15 | 20 |
| Wenchuan | T2 | - | - | - | - | 9 | 11 |
| Wenchuan | T3 | - | - | - | - | 11 | 20 |
| Wenchuan | T4 | - | - | - | - | 4 | 11 |
| Wenchuan | T5 | - | - | - | - | 5 | 8 |
| Wenchuan | T6 | - | - | - | - | 3 | 13 |
| Wenchuan | Beyond T1–T6 | - | - | - | - | - | 795 |
| Beichuan | - | - | - | 25 | 19 | 26 | 19 |
| Beichuan | S1 | 25 | 19 | 25 | 19 | 26 | 19 |
| Beichuan | S1,2 | 51 | 38 | 25 | 19 | 26 | 19 |
| Beichuan | S1,2,3 | 76 | 57 | 25 | 19 | 26 | 19 |
| Beichuan | S1,2,3,4 | 102 | 76 | 25 | 19 | 26 | 19 |
| Beichuan | S1,2,3,4,5 | 127 | 96 | 25 | 19 | 26 | 19 |
| Beichuan | S1,2,3,4,5,6 ¹ | 153 | 115 | 25 | 19 | 26 | 19 |

| Network | Type | Acc. ¹ (%) | F1 | Recall: No damage ² (%) | Recall: Damage (%) | Precision: No damage ² (%) | Precision: Damage (%) | Kappa |
|---|---|---|---|---|---|---|---|---|
| VGG-16 | CNN-F | 74.7 | 0.746 | 73.8 | 75.6 | 75.8 | 73.6 | 0.49 |
| VGG-16 | CNN-T | 83.6 | 0.825 | 88.3 | 78.6 | 81.0 | 86.7 | 0.67 |
| Inception V3 | CNN-F | 62.0 | 0.622 | 60.4 | 63.7 | 63.2 | 60.8 | 0.24 |
| Inception V3 | CNN-T | 80.3 | 0.789 | 85.2 | 75.1 | 78.0 | 83.1 | 0.60 |
| ResNet50 | CNN-F | 54.8 | 0.530 | 35.2 | 75.1 | 59.4 | 52.8 | 0.10 |
| ResNet50 | CNN-T | 63.5 | 0.523 | 95.0 | 37.3 | 61.0 | 87.0 | 0.33 |
| DenseNet121 | CNN-F | 68.2 | 0.712 | 56.8 | 80.0 | 74.6 | 64.2 | 0.37 |
| DenseNet121 | CNN-T | 82.1 | 0.805 | 88.9 | 75.1 | 78.7 | 86.8 | 0.64 |

| Network | Fine-tune sample | Test accuracy (%) | Test F1 score | Recall: No damage (%) | Recall: Damage (%) | Kappa |
|---|---|---|---|---|---|---|
| DenseNet121 | - | 77.8 | 0.643 | 100 | 47.4 | 0.51 |
| DenseNet121 | S1 | 82.2 | 0.800 | 80.8 | 84.2 | 0.64 |
| DenseNet121 | S1,2 | 88.9 | 0.865 | 92.3 | 84.2 | 0.77 |
| DenseNet121 | S1,2,3 | 84.4 | 0.821 | 84.6 | 84.2 | 0.68 |
| DenseNet121 | S1,2,3,4 | 91.1 | 0.889 | 96.2 | 84.2 | 0.82 |
| DenseNet121 | S1,2,3,4,5 | 88.9 | 0.857 | 96.2 | 78.9 | 0.77 |
| DenseNet121 | S1,2,3,4,5,6 | 88.9 | 0.872 | 88.5 | 89.5 | 0.77 |
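The accuracy, F1, recall and kappa columns in these tables can all be derived from a 2 × 2 confusion matrix. The sketch below uses the standard definitions, which is an assumption about how the tables were computed. It treats "Damage" as the positive class for F1, which matches the reported F1 values. As a check, it rebuilds the S1,2,3,4 row of the fine-tuning table. The Beichuan test set has 26 undamaged and 19 damaged buildings, so recalls of 96.2% and 84.2% correspond to 25 and 16 correct predictions. These counts are inferred, not reported, and the class name `Confusion` is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    """Binary confusion matrix with "damage" as the positive class."""
    tp: int  # damaged buildings predicted as damaged
    tn: int  # undamaged buildings predicted as undamaged
    fp: int  # undamaged buildings predicted as damaged
    fn: int  # damaged buildings predicted as undamaged

    @property
    def n(self):
        return self.tp + self.tn + self.fp + self.fn

    def accuracy(self):
        return (self.tp + self.tn) / self.n

    def recall_damage(self):
        return self.tp / (self.tp + self.fn)

    def recall_no_damage(self):
        return self.tn / (self.tn + self.fp)

    def precision_damage(self):
        return self.tp / (self.tp + self.fp)

    def f1_damage(self):
        p, r = self.precision_damage(), self.recall_damage()
        return 2 * p * r / (p + r)

    def kappa(self):
        """Cohen's kappa: (observed agreement - chance agreement) / (1 - chance agreement)."""
        po = self.accuracy()
        pred_pos, pred_neg = self.tp + self.fp, self.tn + self.fn
        act_pos, act_neg = self.tp + self.fn, self.tn + self.fp
        pe = (pred_pos * act_pos + pred_neg * act_neg) / self.n**2
        return (po - pe) / (1 - pe)


# Inferred counts for the S1,2,3,4 row: 25 of 26 undamaged and 16 of 19 damaged are correct.
cm = Confusion(tp=16, tn=25, fp=1, fn=3)
print(f"accuracy {cm.accuracy():.1%}")  # accuracy 91.1%
print(f"F1 {cm.f1_damage():.3f}")  # F1 0.889
print(f"recall no/damage {cm.recall_no_damage():.1%} / {cm.recall_damage():.1%}")  # recall no/damage 96.2% / 84.2%
print(f"kappa {cm.kappa():.2f}")  # kappa 0.82
```

Taking "damage" as the positive class reproduces the F1 column for this row. A macro-averaged F1 (the mean of the per-class F1 values) would give a different number.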

(a) xBD-Test

| Model | Balance method | Recall: No damage ¹ (%) | Recall: Damage (%) | Precision: No damage (%) | Precision: Damage (%) | Accuracy (%) | F1 | Kappa |
|---|---|---|---|---|---|---|---|---|
| DenseNet121 | -- | 92.0 | 38.7 | 60.8 | 82.4 | 65.8 | 0.527 | 0.31 |
| DenseNet121 | Down-sampling | 88.9 | 75.1 | 78.7 | 86.8 | 82.1 | 0.805 | 0.64 |
| DenseNet121 | Cost-sensitive | 59.5 | 87.6 | 83.2 | 67.7 | 73.3 | 0.763 | 0.47 |

(b) Wenchuan

| Network | Balance method | Recall, T1–T6: No (%) | Recall, T1–T6: Damage (%) | Recall, beyond T1–T6: Damage (%) | Precision, T1–T6: No (%) | Precision, T1–T6: Damage (%) | T1–T6 Acc. ² (%) | T1–T6 F1 | T1–T6 Kappa |
|---|---|---|---|---|---|---|---|---|---|
| DenseNet121 | -- | 77.5 | 82.9 | 77.5 | 90.8 | 63.0 | 79.2 | 0.716 | 0.56 |
| DenseNet121 | Down-sampling | 68.5 | 95.1 | 91.1 | 96.8 | 58.2 | 76.9 | 0.722 | 0.54 |
| DenseNet121 | Cost-sensitive | 17.9 | 1 | 99.5 | 36.0 | 43.8 | 43.8 | 0.529 | 0.12 |
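The two balancing strategies compared above can each be sketched in a few lines. This is an illustration under stated assumptions, not the authors' implementation:

- "Down-sampling" is taken to mean randomly discarding samples from the larger class until both classes are the same size.
- "Cost-sensitive" is taken to mean weighting each class by n_samples / (n_classes × n_class_samples). This is the inverse-frequency rule behind scikit-learn's `class_weight="balanced"`.

The toy labels reuse the xBD counts from the first sample table, with the three damaged levels pooled (17,929 + 14,173 + 17,634 = 49,736). The function names are hypothetical.

```python
import numpy as np


def random_downsample(labels, seed=0):
    """Return sorted indices of a class-balanced subset obtained by random undersampling.

    Every class is reduced, without replacement, to the size of the smallest class.
    """
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(labels, return_counts=True)
    n_min = counts.min()
    keep = [rng.choice(np.flatnonzero(labels == c), size=n_min, replace=False)
            for c in classes]
    return np.sort(np.concatenate(keep))


def inverse_frequency_weights(labels):
    """Per-class weights n_samples / (n_classes * n_c) for a cost-sensitive loss."""
    classes, counts = np.unique(labels, return_counts=True)
    weights = len(labels) / (len(classes) * counts)
    return {int(c): float(w) for c, w in zip(classes, weights)}


# 0 = no damage, 1 = damage (pooled), using the xBD counts.
labels = np.repeat([0, 1], [165_844, 49_736])
idx = random_downsample(labels)
print(np.bincount(labels[idx]))  # [49736 49736]
print(inverse_frequency_weights(labels))
```

Down-sampling discards most of the majority class, whereas the weighted loss keeps every sample but penalizes errors on the rarer class about 3.3 times more heavily. In Keras, a dictionary of this form can be passed as the `class_weight` argument of `Model.fit`.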

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yang, W.; Zhang, X.; Luo, P. Transferability of Convolutional Neural Network Models for Identifying Damaged Buildings Due to Earthquake. Remote Sens. 2021, 13, 504. https://doi.org/10.3390/rs13030504
